Ever wonder how websites get listed on search engines and how Google, Bing, and others provide us with tons of information in a matter of seconds?

The secret of this lightning-fast performance lies in search indexing. It can be compared to a huge and perfectly ordered catalog archive of all pages. Getting into the index means that the search engine has seen your page, evaluated it, and remembered it, so it can show this page in search results.

Let’s dig into the process of indexing from scratch in order to understand:

- How the search engines collect and store the information from billions of websites, including yours

- How you can manage this process

- What you need to know about indexing site resources with the help of different technologies

What is search engine indexing?

To participate in the race for the first position in SERP, your website has to go through a selection process:

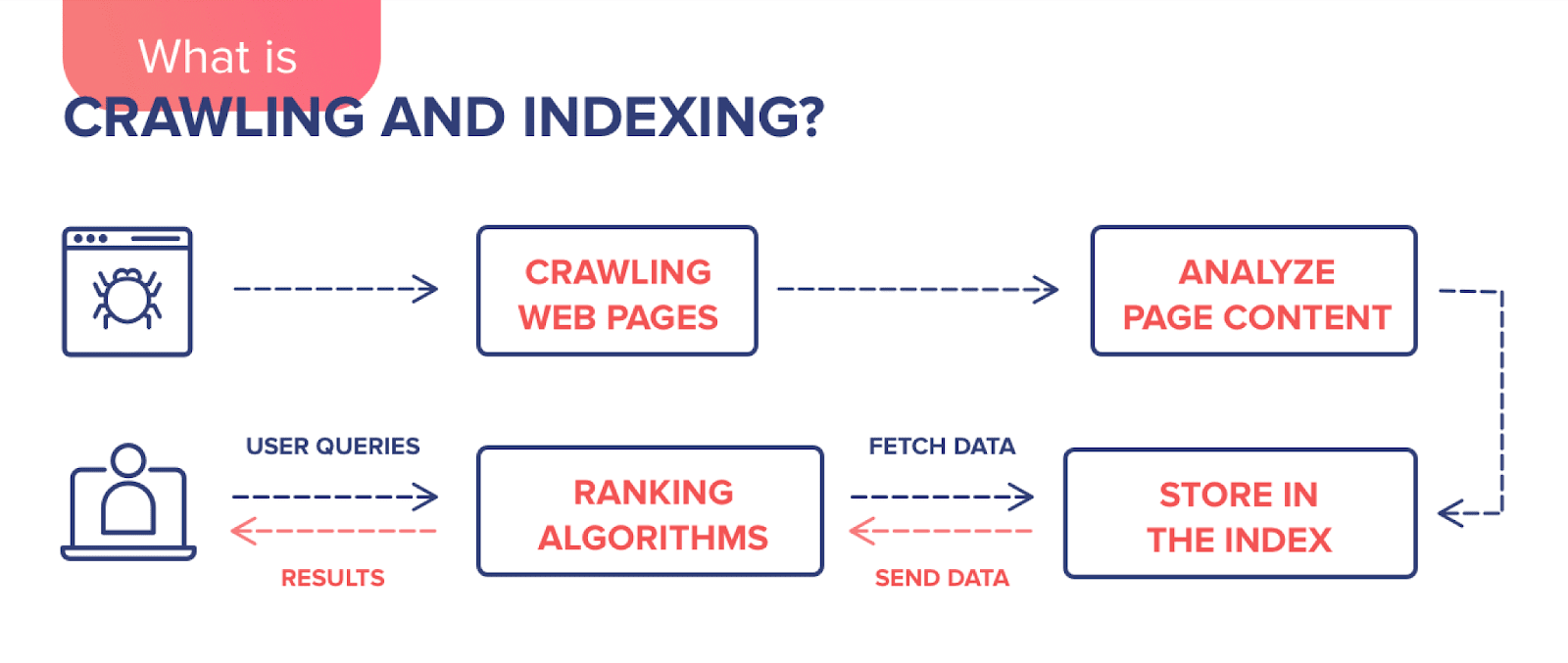

Step 1. Web spiders (or bots) scan all the website’s known URLs. This is called crawling.

Step 2. The bots collect and store data from the web pages, which is called indexing.

Step 3. And finally, the website and its pages can compete in the game trying to rank for a specific query.

In short, if you want users to find your website on Google, it needs to be indexed: information about the page should be added to the search engine database.

The search engine scans your website to find out what it is about and which type of content is on its pages. If the search engine likes what it sees, it can then store copies of the pages in the search index. For each page, the search engine stores the URL and content information. Here is what Google says:

“When crawlers find a web page, our systems render the content of the page, just as a browser does. We take note of key signals—from keywords to website freshness—and we keep track of it all in the Search index.”

Web crawlers index pages and their content, including text, internal links, images, audio, and video files. If the content is considered to be valuable and competitive, the search engine will add the page to the index, and it’ll be in the “game” to compete for a place in the search results for relevant user search queries.

As a result, when users enter a search query on the Internet, the search engine quickly looks through its list of scanned websites and shows only relevant pages in the SERP, like a librarian looking for the books in a catalog—alphabetically, by subject, and by the exact title.

Keep in mind: pages are only added to the index if they contain quality content and don’t trigger any alarms by doing shady things like keyword stuffing or building a bunch of links from disreputable sources. At the end of this post, we’ll discuss the most common indexing errors.

What helps crawlers find your site?

If you want a search engine to find out about your website or its new pages, you have to show it to the search engine. The most popular and effective ways include: submitting a sitemap to Google, using external links, engaging social media, and using special tools.

Let’s dive into these ways to speed up the indexing process:

1. Submitting your sitemap to Google

To make sure we are on the same page, let’s first refresh our memories. The XML sitemap is a list of all the pages on your website (an XML file) crawlers need to be aware of. It serves as a navigation guide for bots. The sitemap does help your website get indexed faster with a more efficient crawl rate.

Furthermore, it can be especially helpful if your content is not easily discoverable by a crawler. It is not, however, a guarantee that those URLs will be crawled or indexed.
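
To make the idea of a sitemap more concrete, here is a minimal sketch of how such a file could be generated from a list of page addresses. The function names buildSitemap and escapeXml and the example.com URLs are hypothetical; the urlset, url, and loc elements and the namespace come from the sitemaps.org protocol. Treat it as an illustration rather than a replacement for your CMS or sitemap plugin.

```javascript
// Escape characters that are not allowed raw inside XML text nodes.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a minimal XML sitemap string from an array of absolute URLs.
function buildSitemap(urls) {
  const entries = urls
    .map((url) => `  <url>\n    <loc>${escapeXml(url)}</loc>\n  </url>`)
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries +
    "\n</urlset>\n"
  );
}

// Hypothetical pages used only for this example.
const sitemapXml = buildSitemap([
  "https://example.com/",
  "https://example.com/blog/?page=1&sort=new",
]);
```

Note that the ampersand in the second URL has to be escaped as an XML entity, which is an easy detail to miss when a sitemap is assembled by hand.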

If you still don’t have a sitemap, take a look at our guide to successful SEO mapping.

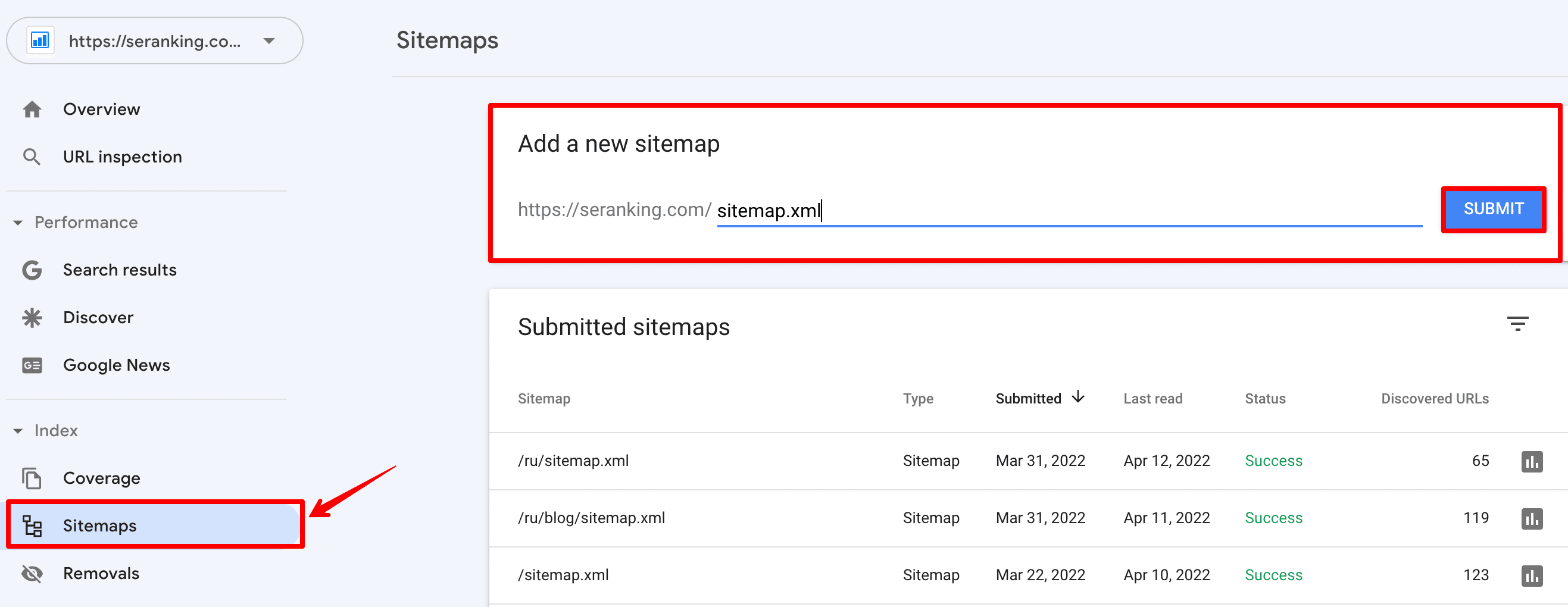

Once you have your sitemap ready, go to your Google Search Console and:

Open the Sitemaps report ▶️ Click Add a new sitemap ▶️ Enter your sitemap URL (normally, it is located at yourwebsite.com/sitemap.xml) ▶️ Hit the Submit button.

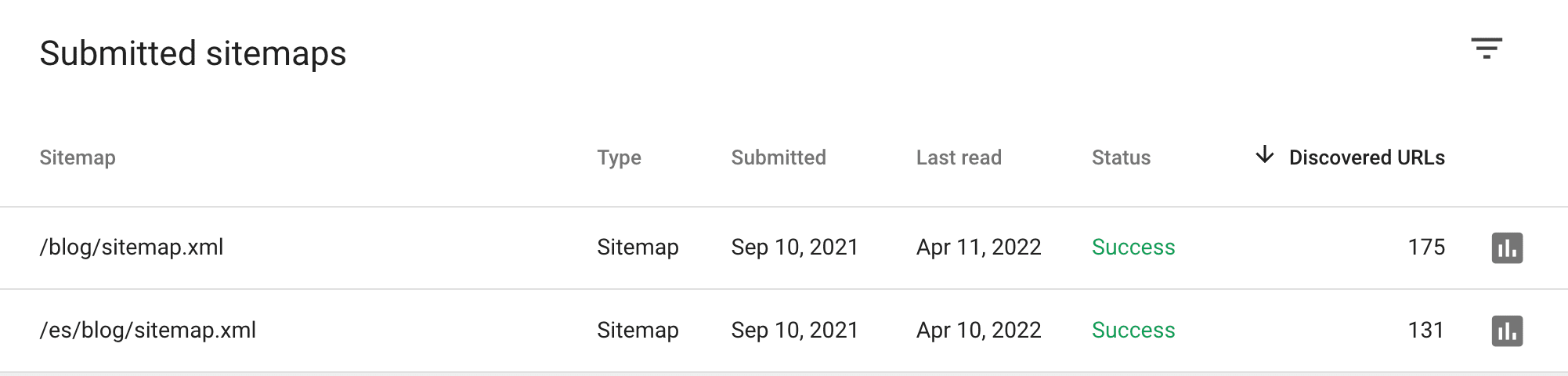

Soon, you’ll see if Google was able to properly process your sitemap. If everything went well, the status will be Success.

In the same table of your Sitemap report, you’ll see the number of discovered URLs. By clicking the icon next to the number of discovered URLs, you’ll get to the Index Coverage Report. Below, I will tell you point by point how to use this report to check your website indexing.

2. Adding links to external resources

Backlinks are a cornerstone of how search engines understand the importance of a page. They give a signal to Google that the resource is useful and that it’s worth getting on top of the SERP.

Recently, during Google SEO office hours, John Mueller said, “Backlinks are the best way to get Google to index content.” In his words, you can submit a sitemap with URLs to GSC. There is no disadvantage to doing that, especially if it’s a new website, where Google has absolutely no signals or no information about it at all. Telling the search engine about the URLs via a sitemap is a way of getting that initial foot in the door. But it’s no guarantee that Google will pick that up.

John Mueller advises webmasters to cooperate with different blogs and resources and get links pointing to their websites. That probably would do more than just going to Search Console and saying I want this URL indexed immediately.

Here are a few ways to get quality backlinks:

- Guest posting: publish your high-quality posts with necessary links on someone else’s blog and reputable websites (e.g., Forbes, Entrepreneur, Business Insider, TechCrunch).

- Creating press releases: inform the audience about your brand by publishing noteworthy news about your company, product updates, and important events on different websites.

- Writing testimonials: find companies that are relevant to your industry and submit a testimonial in exchange for a backlink.

- Social Media Linking: don’t forget about Facebook, Instagram, LinkedIn, and YouTube—add links to your website pages in posts. Social media is a cost-effective tool that can help drive traffic to your site, boost brand awareness, and improve your SEO. By the way, we’ll talk about social signals below.

- Other popular strategies to get backlinks are described in this article.

3. Improving social signals

Search engines want to provide users with high-quality content that meets their search intent. To do so, Google takes into account social signals—likes, shares, and views of social media posts. All of them inform search engines that the content is meeting the needs of their users, and is relevant and authoritative. If users actively share your page, like it, and recommend it for reading, search bots will not get past such content. That’s why it’s very important to be active in social media.

Mind that Google says that social signals are not a direct ranking factor. Still, they can indirectly help with SEO. Google’s partnership with Twitter, which added tweets to SERP, is further evidence of the growing significance of social media in search rankings.

Social signals include all activity on Facebook, Twitter, Pinterest, LinkedIn, Instagram, YouTube, etc. Instagram lets you use the Swipe Up feature to link to your landing pages. With Facebook, you can create a post for each important link. On YouTube, you can add a link to the video description. LinkedIn allows you to raise your website and company credibility. Understanding the individual platforms you’re targeting lets you better tailor your approach to maximize your website effectiveness.

There are a few things to remember:

- Post daily: regularly updated content in social channels tells people and Google that your website and company are active.

- Post relevant content: your content should be about your company, industry, and brand—this is what your followers are waiting for.

- Create shareable content: memes, infographics, and research studies tend to get a lot of likes and reposts.

- Optimize your social profile: make sure to add the link to your website to the account info section.

As a rule of thumb, the more social buzz you create around your website, the faster you will get your website indexed.

4. Using add URL tools

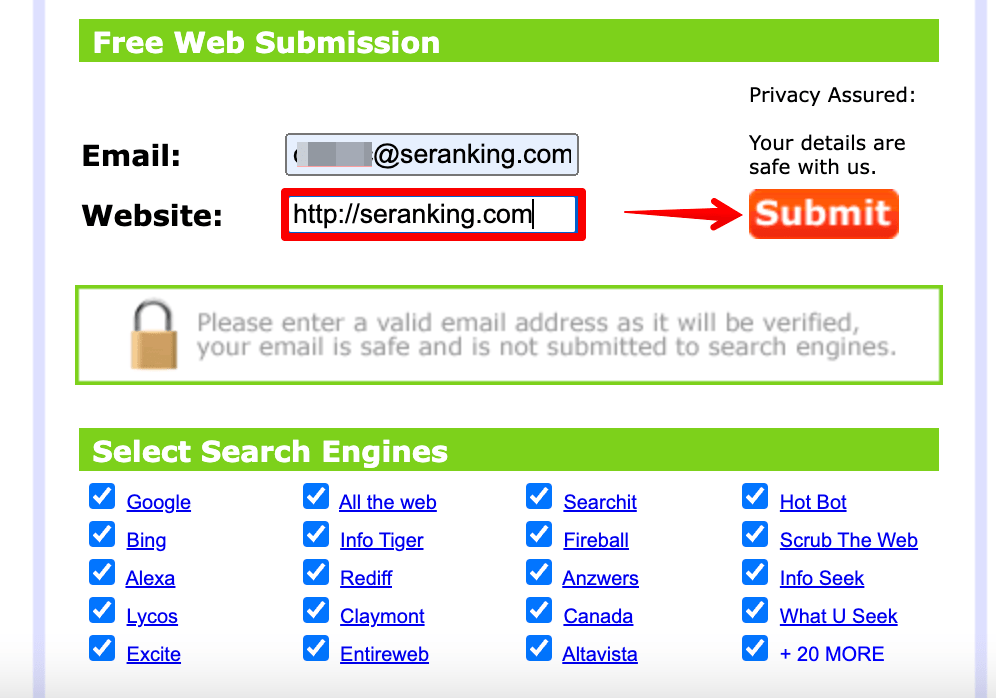

Another way to signal a new website page and try to speed up its indexing is to use add URL tools. They allow you to request the indexing of URLs. This option is available in GSC and other special services. Let’s take a look at different add URL tools.

Google Search Console

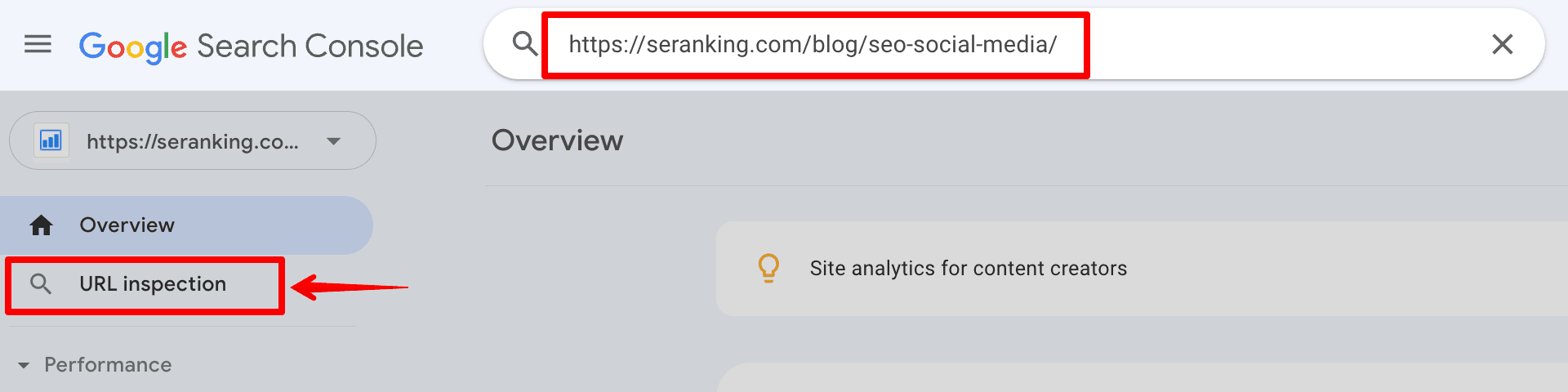

At the beginning of this chapter, I described how to add a sitemap with lots of website links. But if you need to add just one or a few links for indexing, you can use another GSC option. With the URL Inspection tool, you can request a crawl of individual URLs.

Go to your Google Search Console dashboard, click on the URL inspection section, and enter the desired page address in the line:

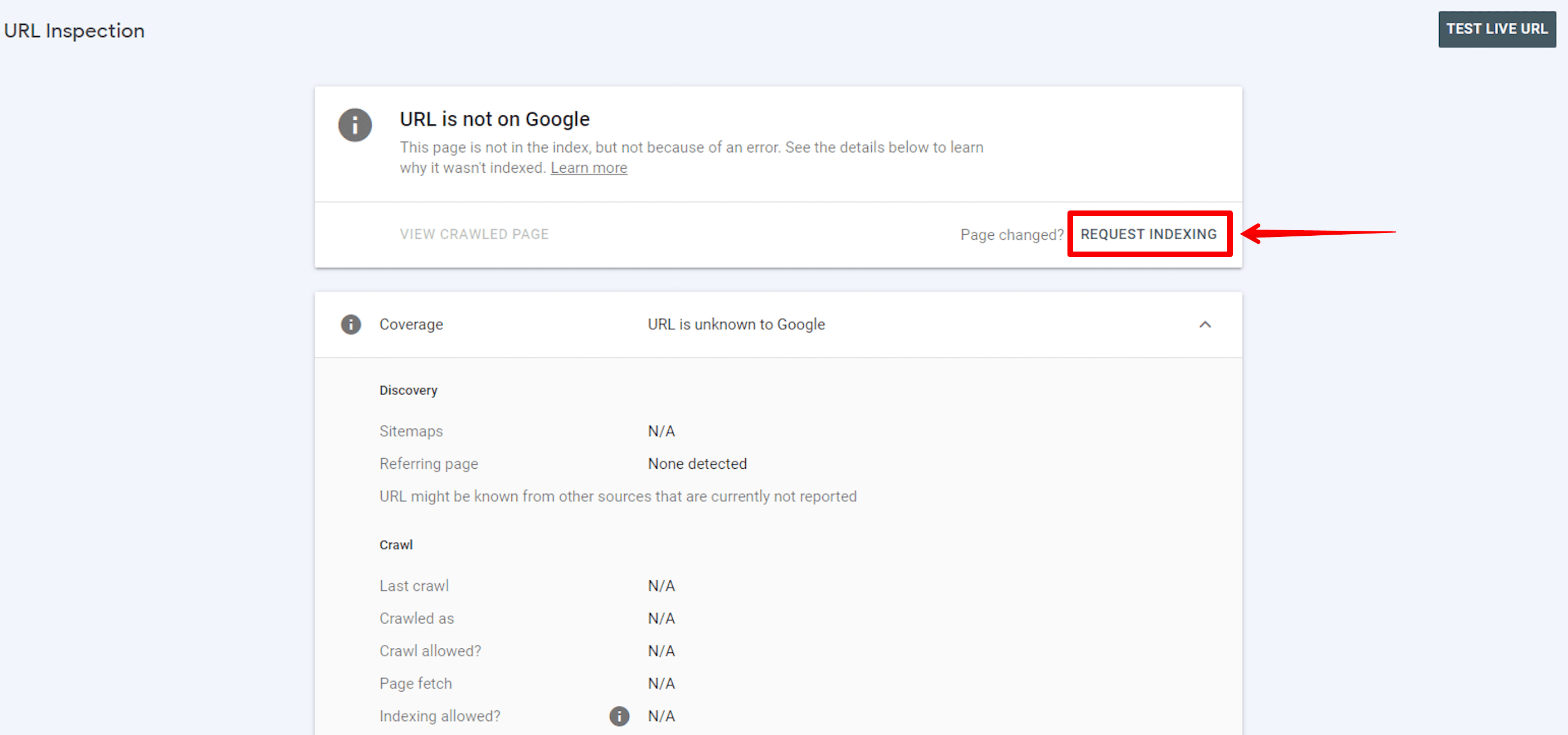

If a page has been created recently, it may not be indexed yet. In that case, you will receive a message about this, and you can request indexing of the URL. Just press the Request Indexing button.

All URLs with new or updated content can be requested for indexing this way via Google Search Console.

Ping services

Pinging is another way of alerting search engines and letting them know immediately about a new piece of content.

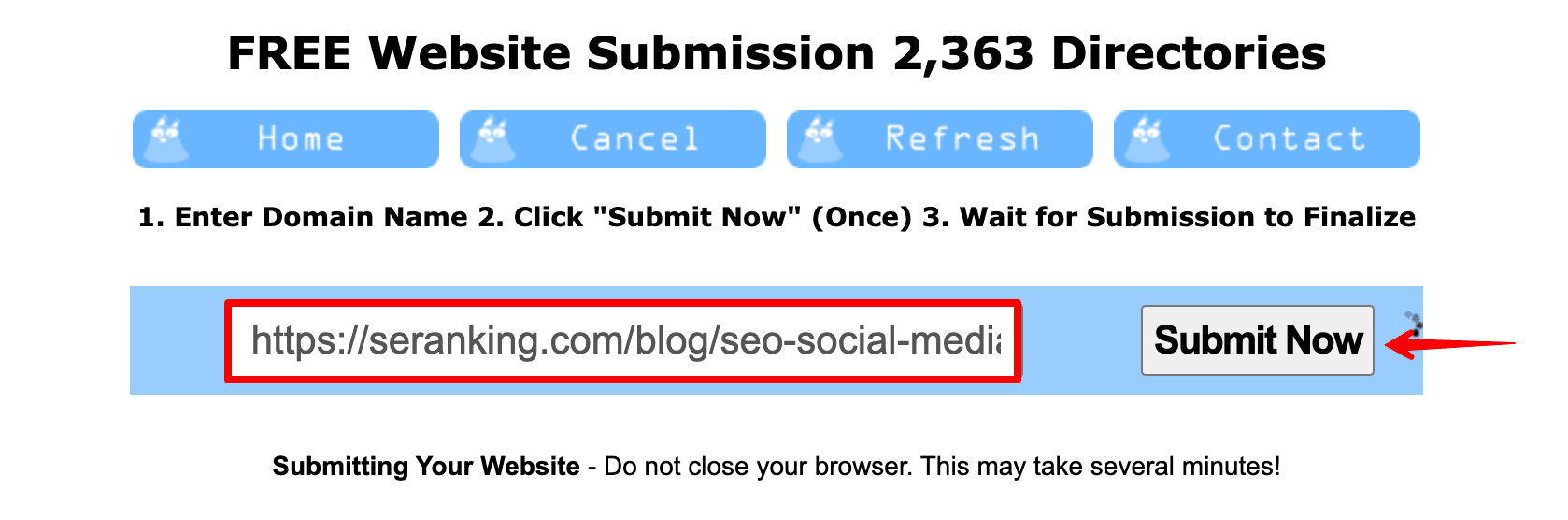

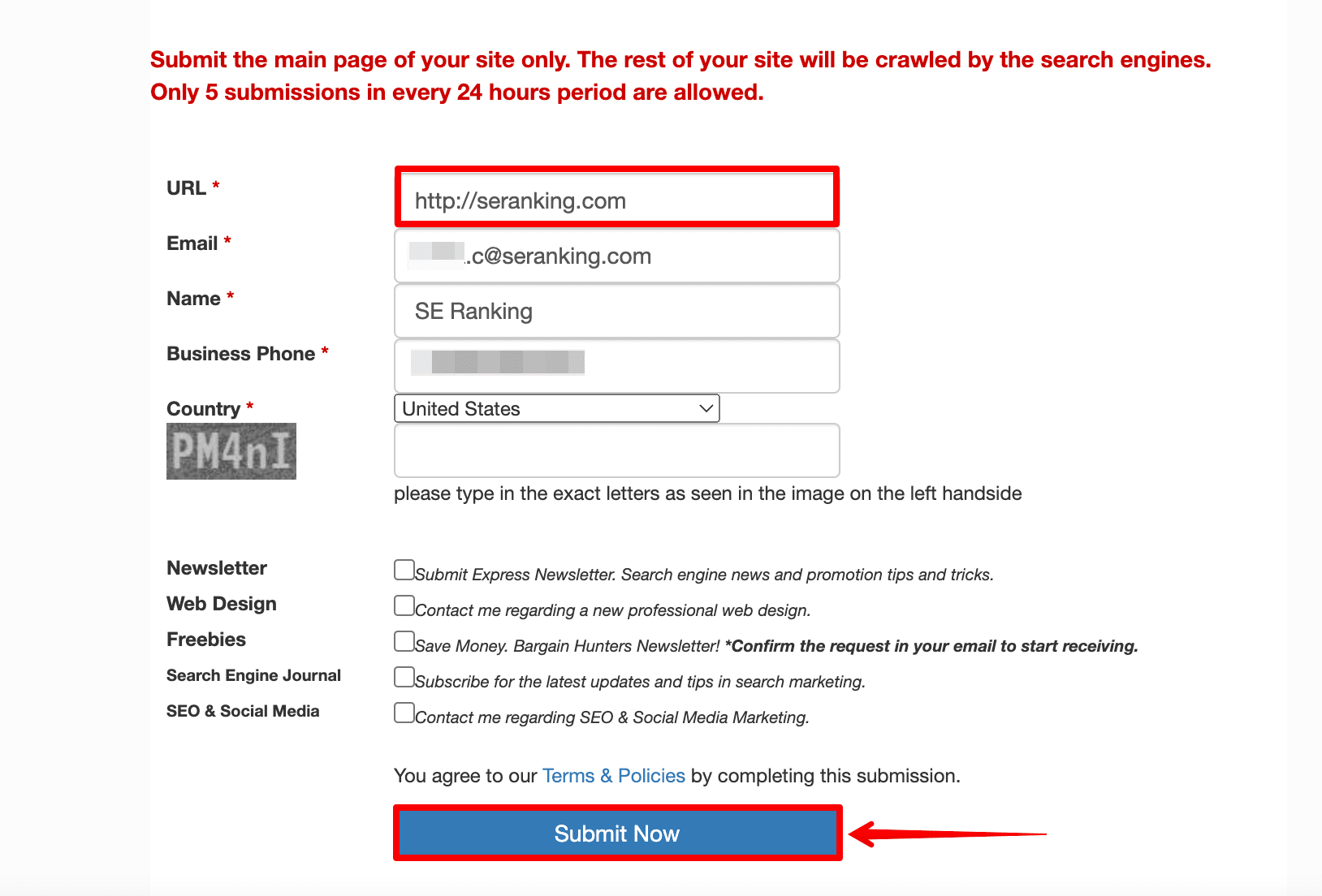

Usually, such submission services use Google API or ping tools—programs designed to speed up indexing of new pages and improve your SEO ranking by sending signals to search engines.

With their help, you can send pages to be indexed by dozens of different search engines in one go. Just complete a few simple steps:

Enter URL ▶️ Click Submit Now ▶️ Wait for Submission

Here are three of the most popular ping tools:

Keep in mind: requesting a crawl does not guarantee that inclusion in search results will happen instantly or even at all.

How to check your website indexing?

You have submitted your website pages for indexing. How do you know that it worked and the necessary pages are already in the index? Let’s look at methods you can use to check your website indexing.

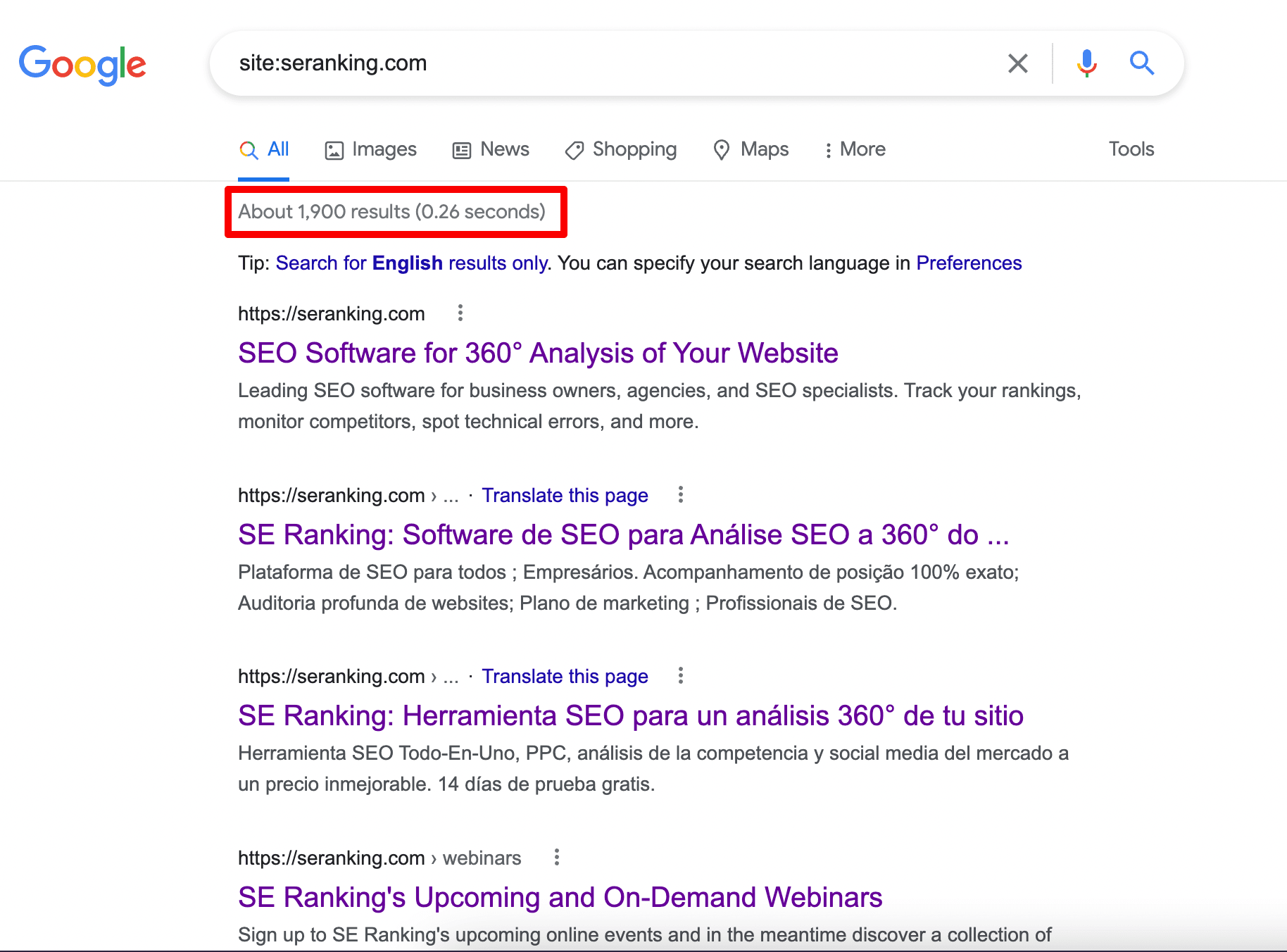

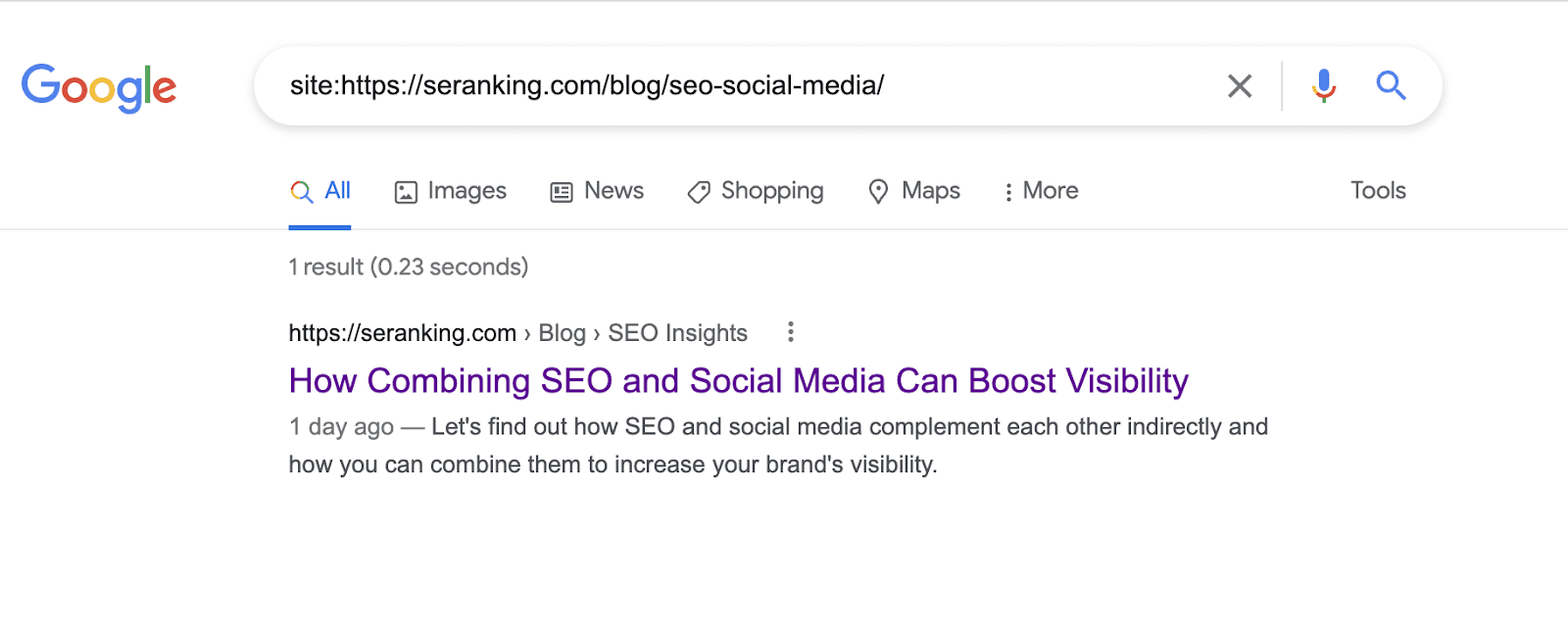

Use Google’s search operator site:

The easiest way to check the indexed URL is to search site:yourdomain.com in Google. This Google command allows limiting your search to the pages of a specified resource. If Google knows your website exists and has already crawled it, you’ll see a list of results similar to the screenshot below.

Here, you can see all indexed website pages.

You can also check the indexing of the page. Just enter the URL instead of the domain:

This is the easiest and fastest way to check indexing. However, the search operator gives you a very limited amount of data. Therefore, if you want to go deeper into the analysis of your website pages, you should pay close attention to the services described below.
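
If you run such checks often, the query can be built programmatically. The sketch below only assembles the search address for a site: query; the function name buildSiteQueryUrl is hypothetical, and yourdomain.com stands in for your own domain.

```javascript
// Build the Google search URL for a site: query.
// `target` can be a bare domain or a full page URL.
function buildSiteQueryUrl(target) {
  const query = "site:" + target;
  // encodeURIComponent keeps characters such as ?, & and / from breaking the query string
  return "https://www.google.com/search?q=" + encodeURIComponent(query);
}

// Check the whole domain.
const domainCheckUrl = buildSiteQueryUrl("yourdomain.com");

// Check a single page by passing its URL instead of the domain.
const pageCheckUrl = buildSiteQueryUrl("https://yourdomain.com/blog/post?id=1");
```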

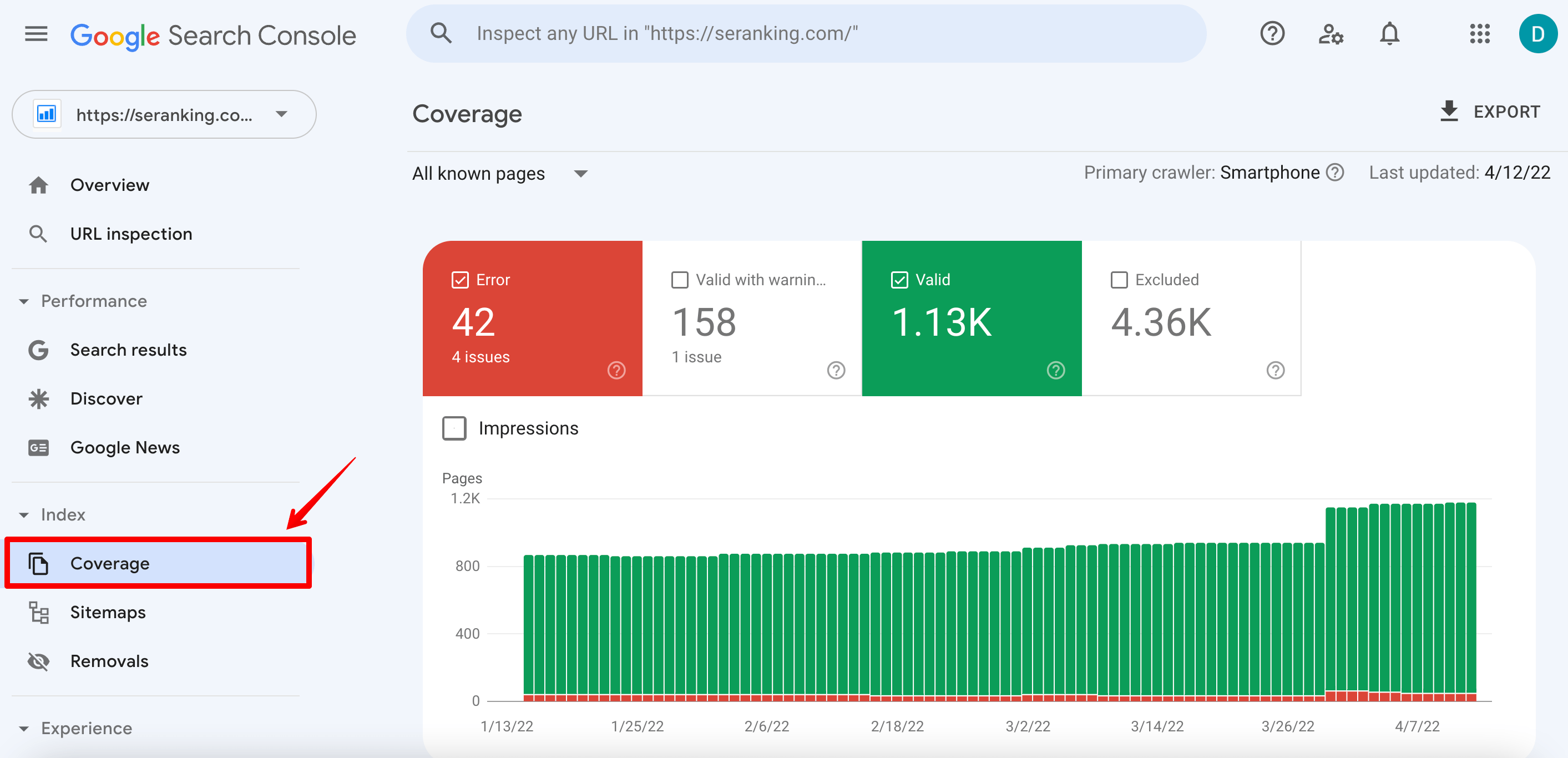

Analyze the Coverage report in GSC

Google Search Console allows you to monitor which of your website pages are indexed, which are not, and why. We’ll show you how to check this.

Firstly, click on the Index section and go to the Coverage report.

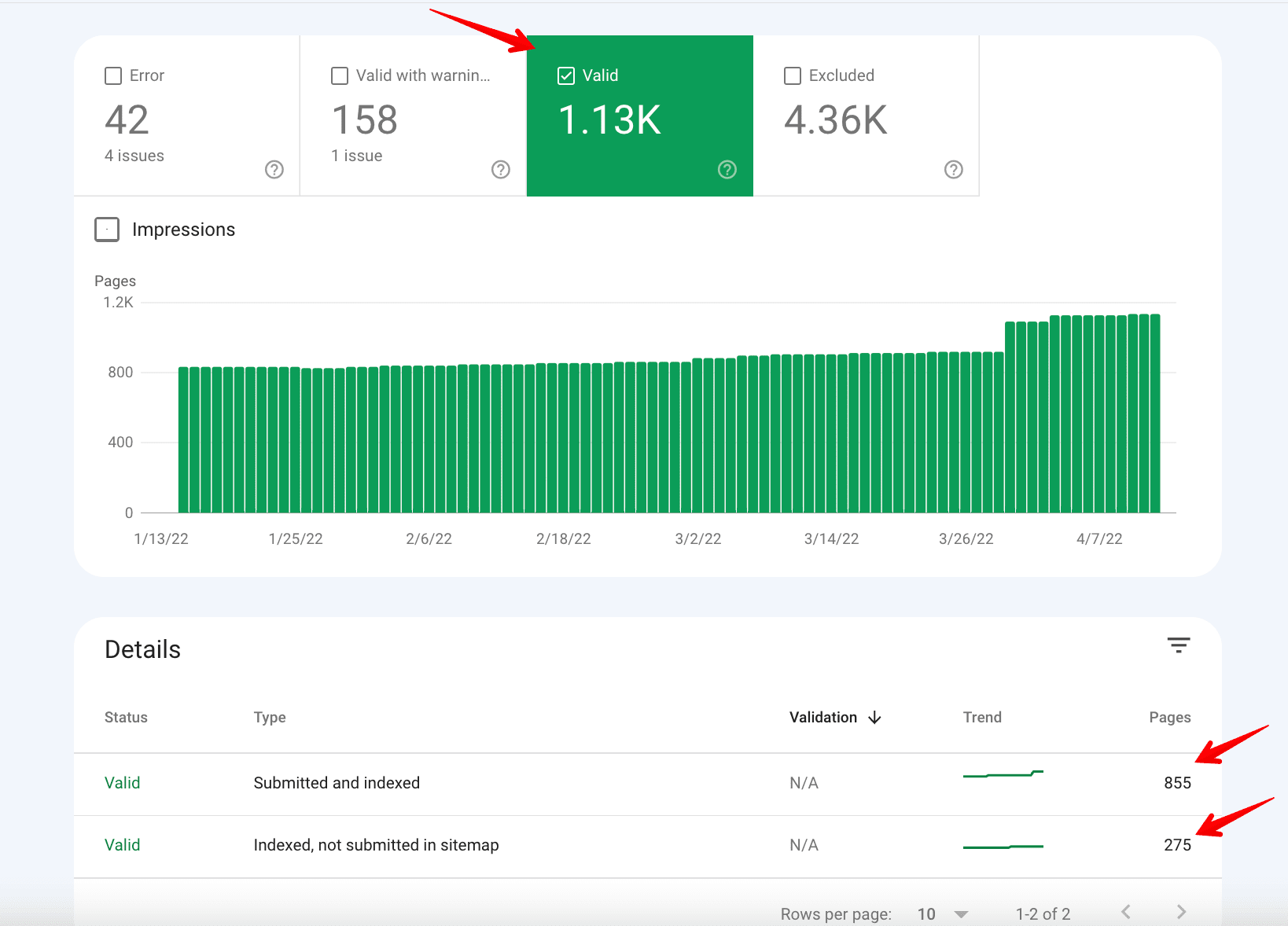

Then click on the Valid tab—here is information about all the indexed website pages.

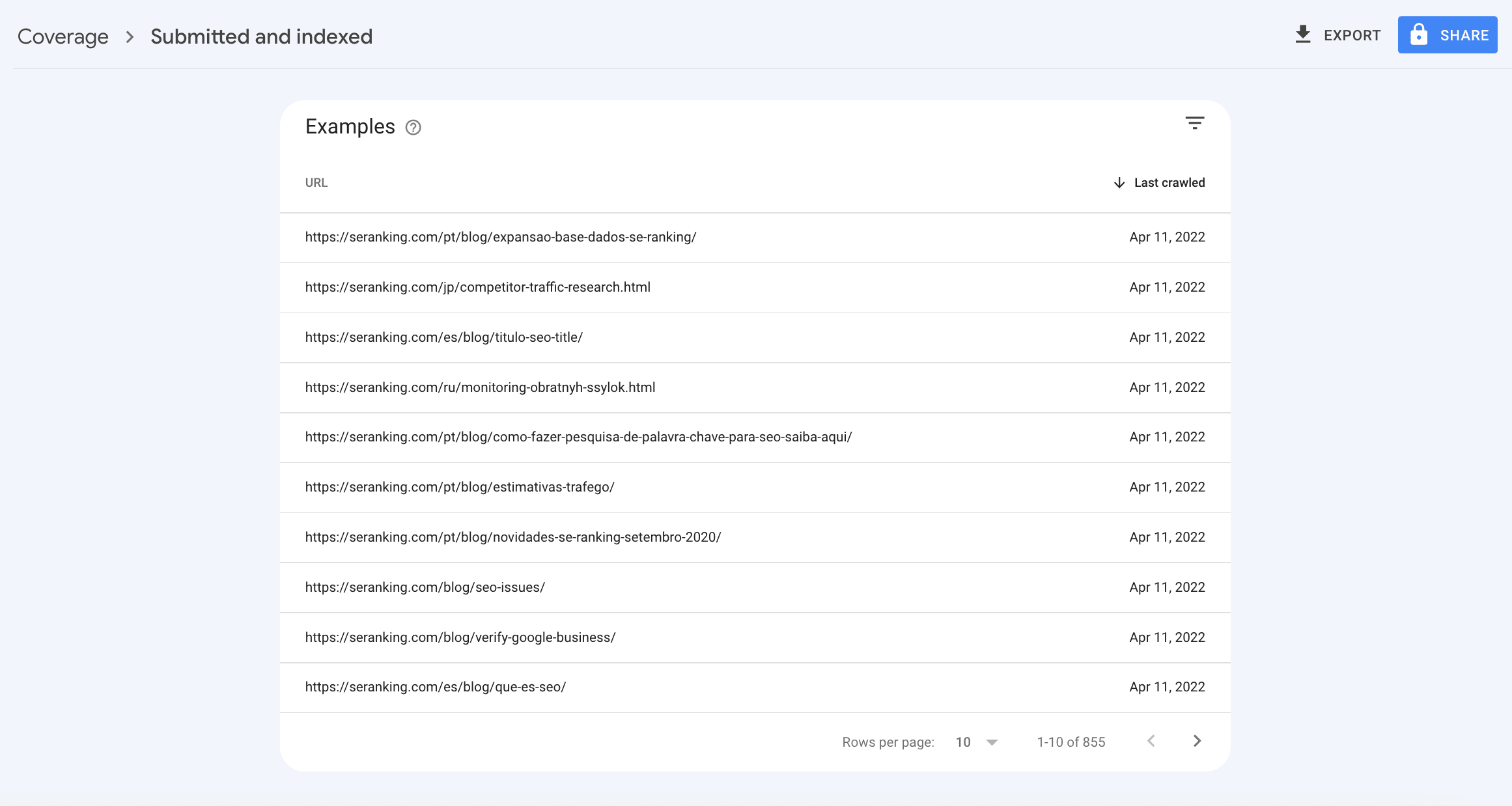

Scroll down. You’ll see the table with two groups of indexed pages: Submitted and indexed & Indexed, not submitted in sitemap. Click into the first row—here, you can learn more about submitted in sitemap and indexed pages. Besides, you may also find out when the page was last crawled by Google.

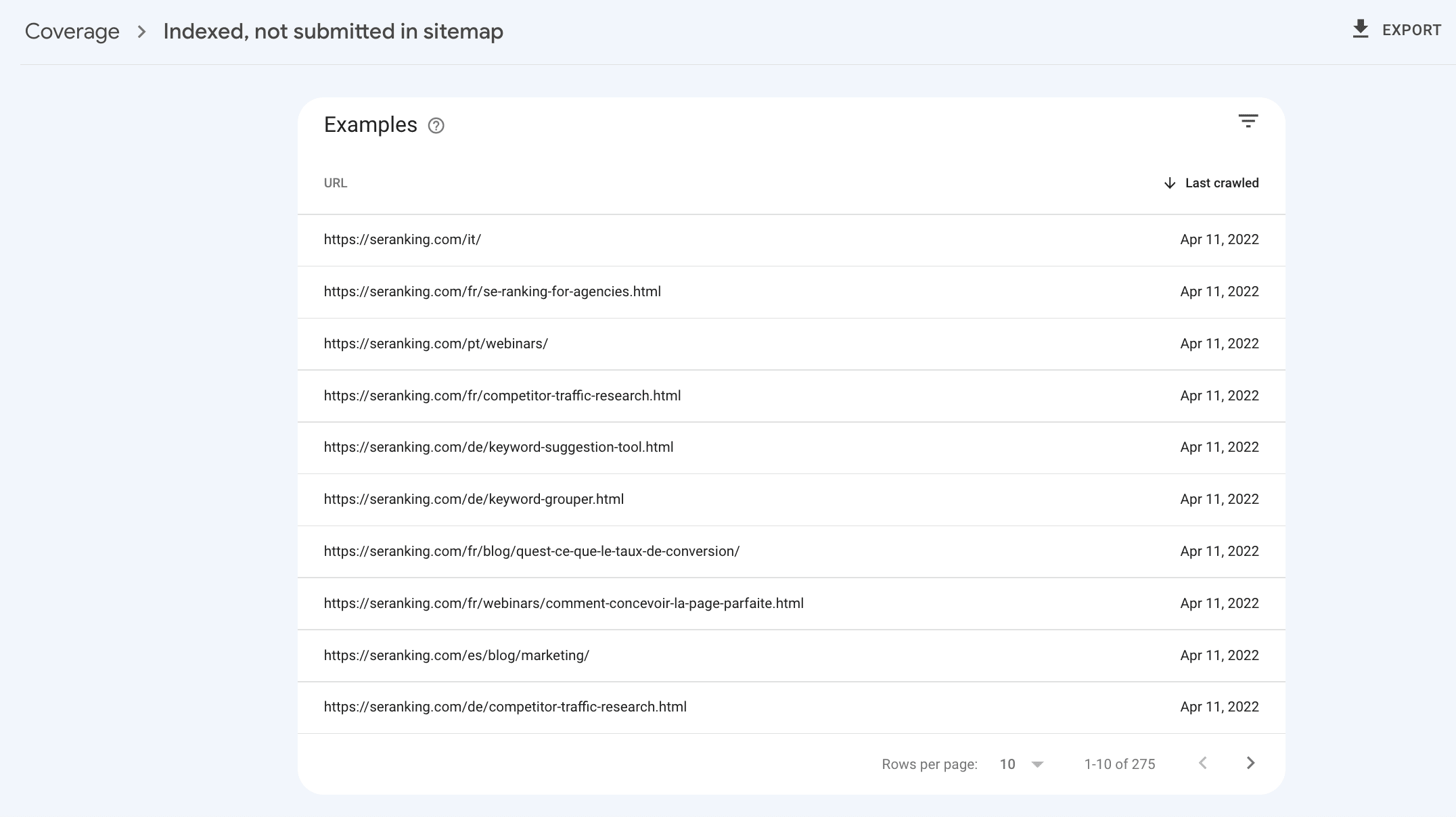

Then click into the second row—you’ll see indexed pages that were not submitted in the sitemap. You may want to add them to your sitemap, since Google believes these are high-quality pages.

Now, let’s move on to the next stage.

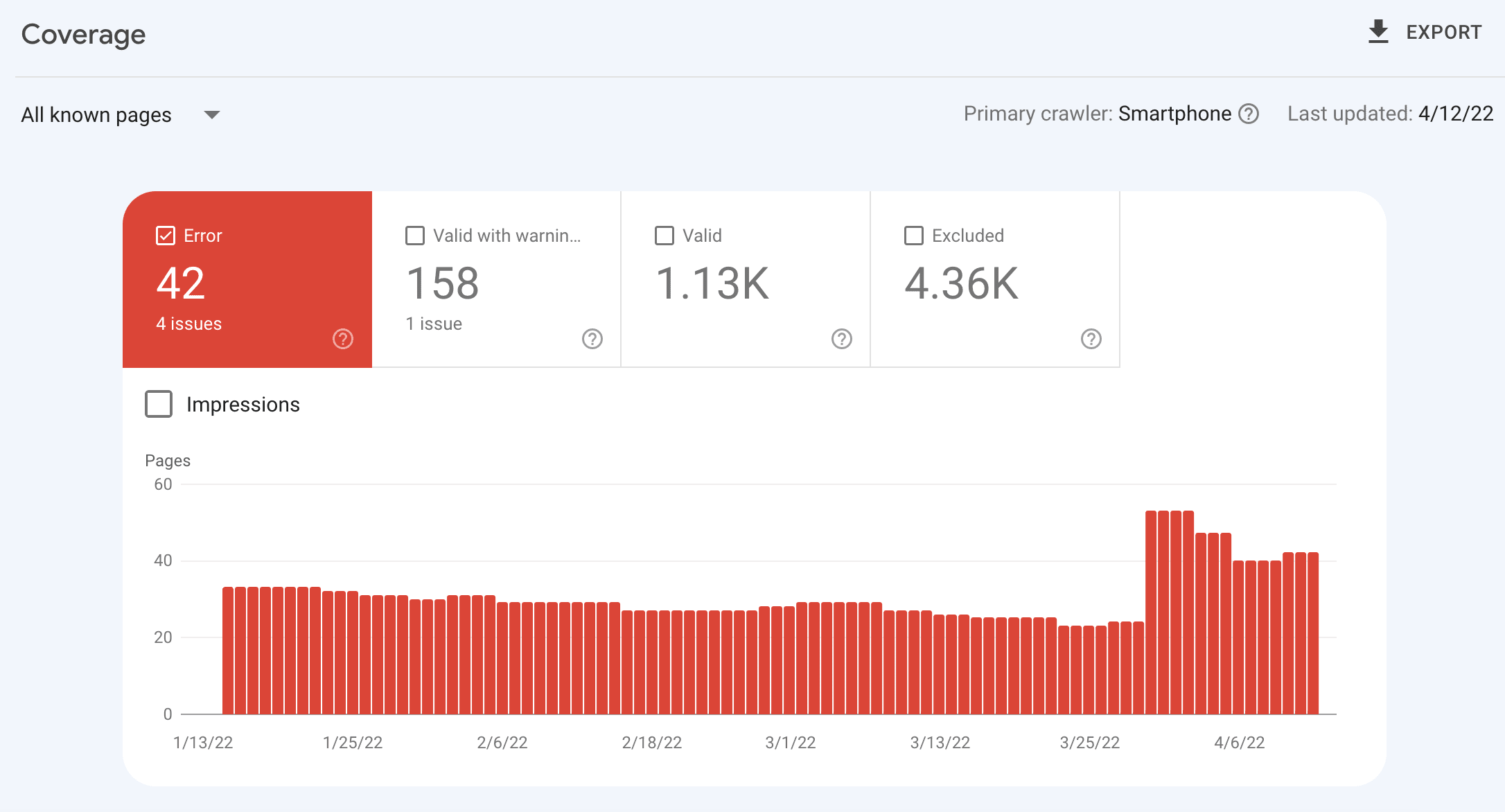

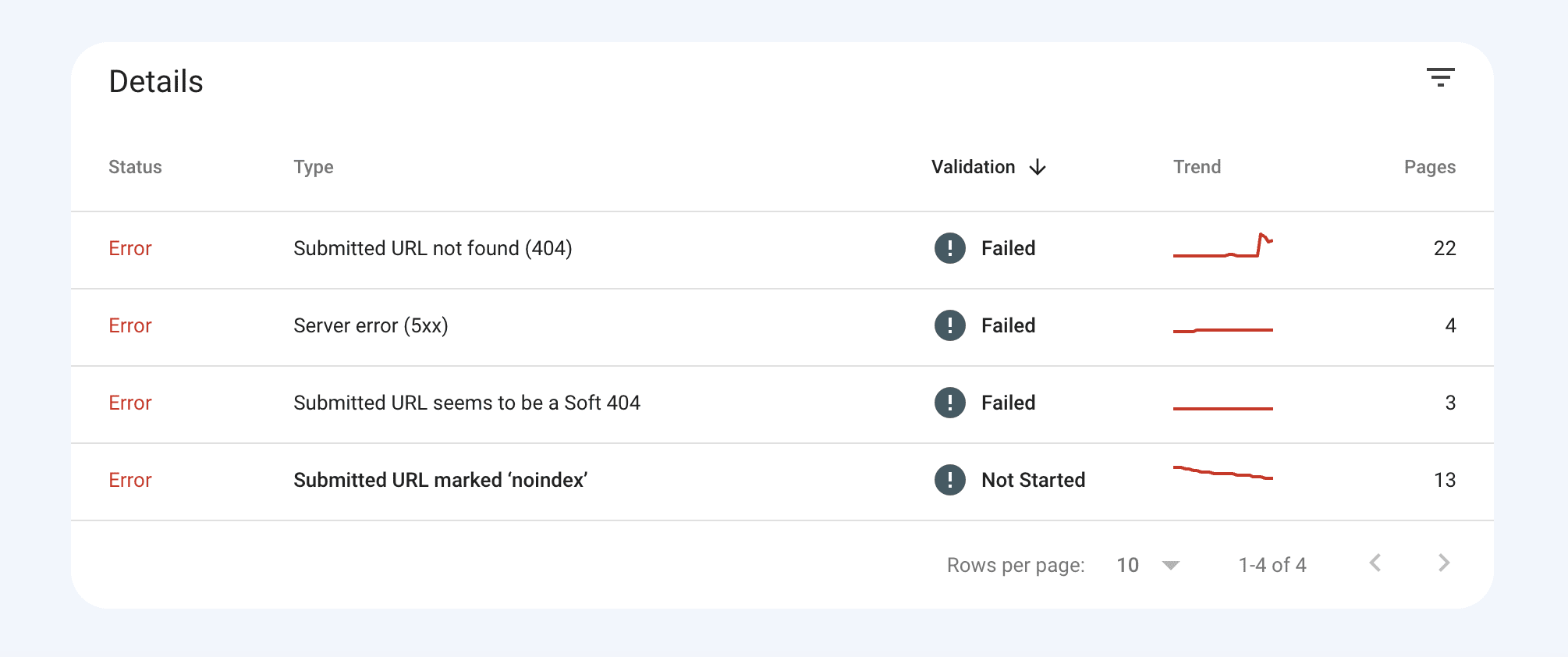

The Error tab shows pages that couldn’t be indexed for some reason.

Click into an error row in the table to see details, including how to fix it.

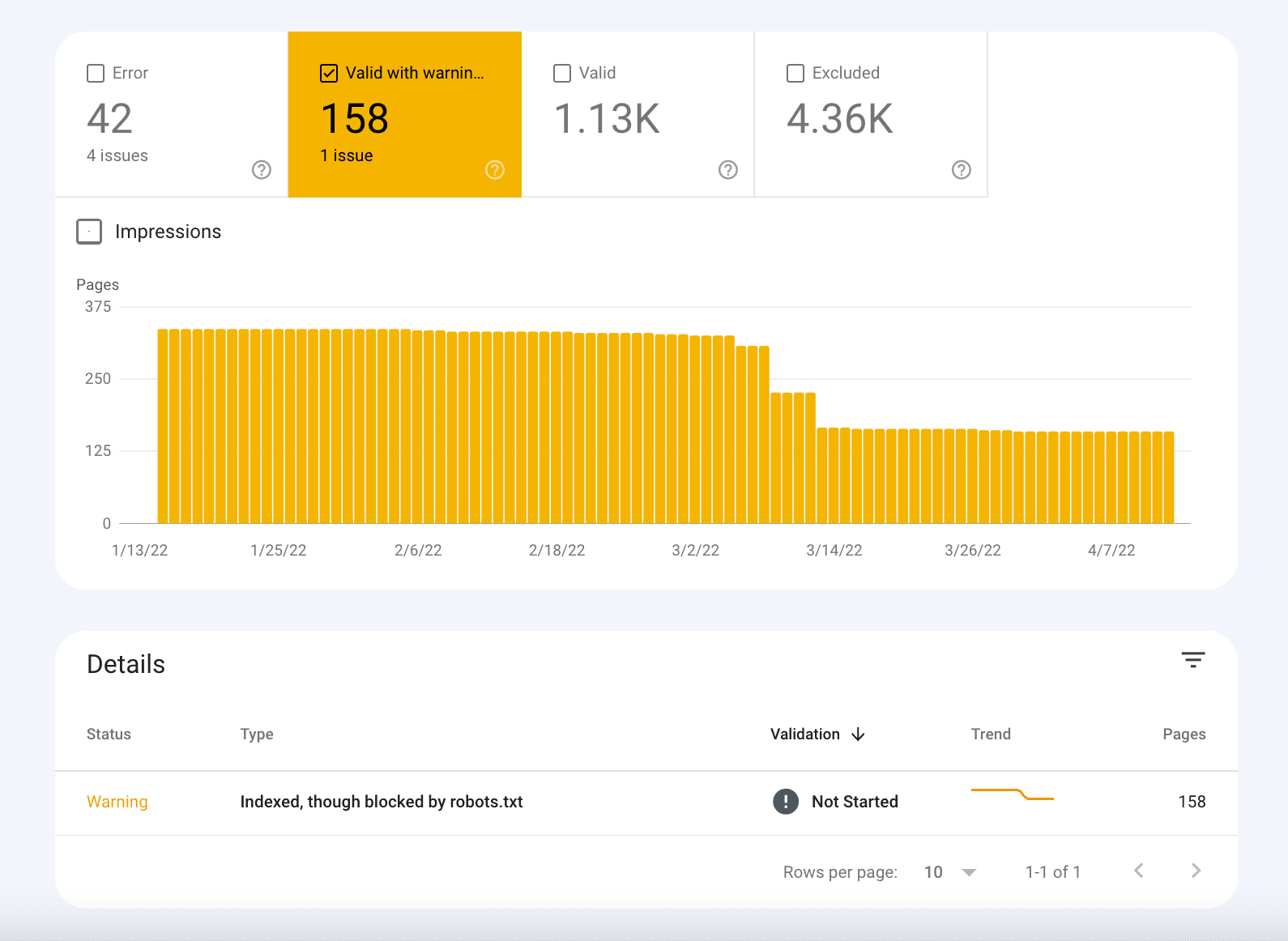

The Valid with warnings tab shows pages that have been indexed, but there are some issues that can be intentional on your part. Click into a warning row in the table to see details and try to fix issues. This will help you rank better.

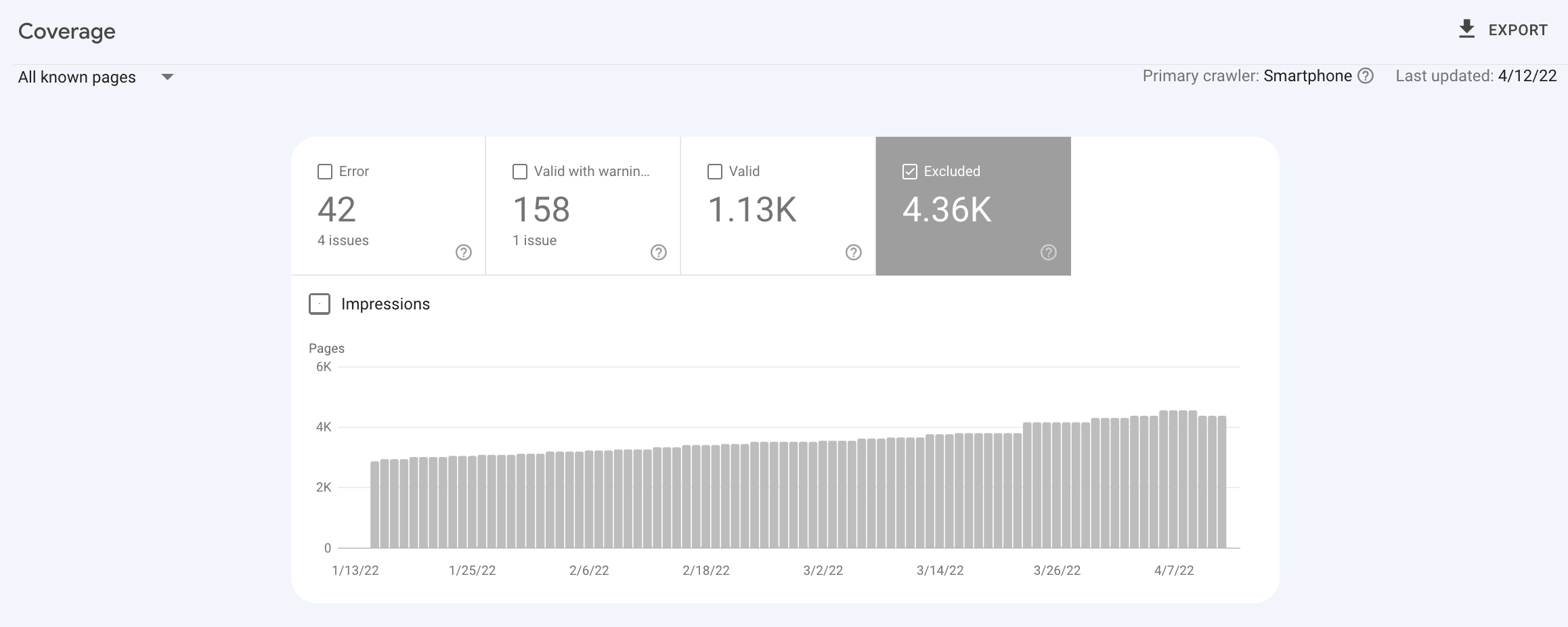

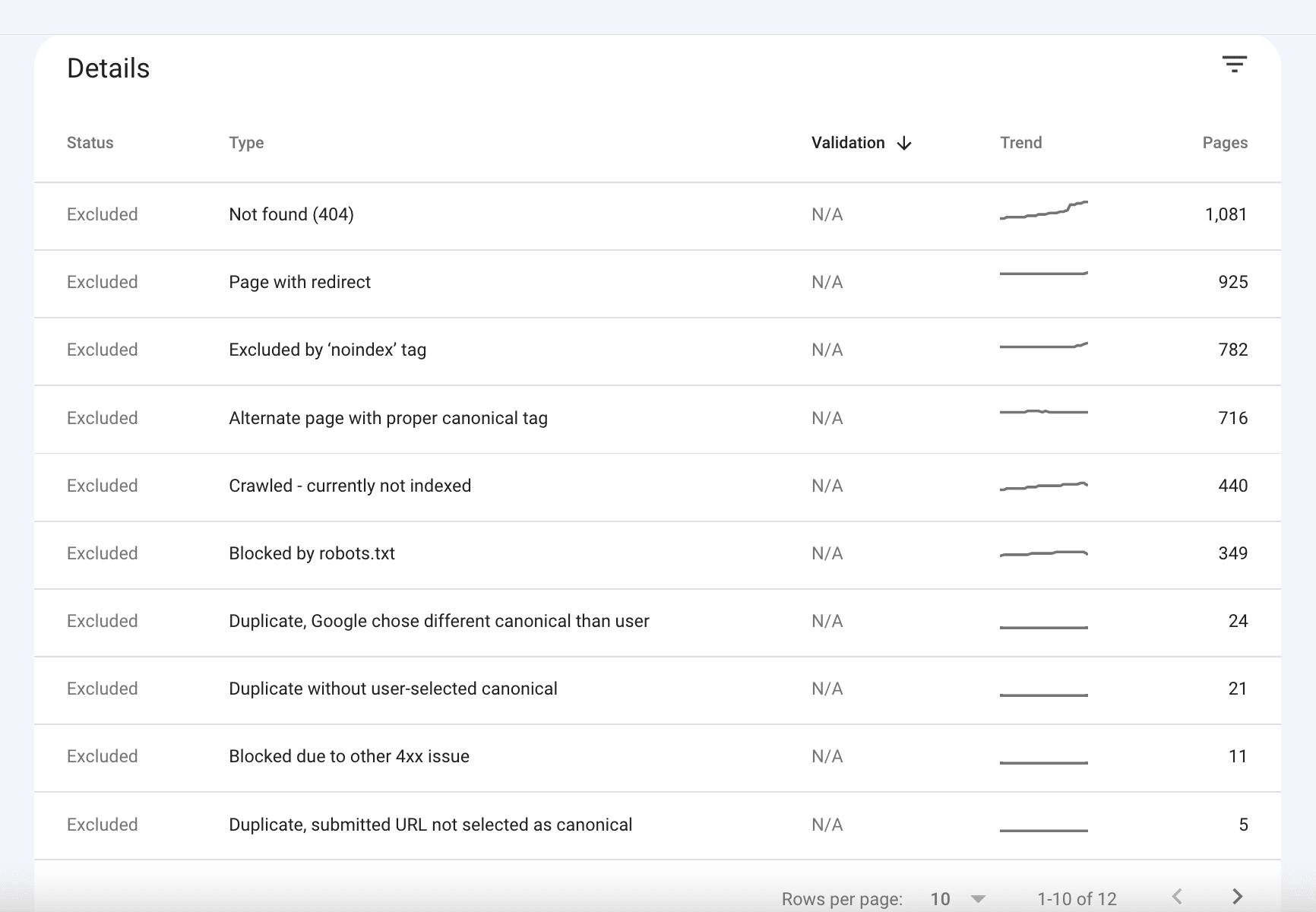

And the last tab is Excluded. These are pages that were not indexed.

Click into a row in the table to see details:

Look through all these pages carefully: you may find URLs that can be fixed so that Google will index them and start ranking. Pay attention to the error in front of each page and try to work on it, if necessary.

Use special tools

In addition to Google Search Console and the site: search operator, you can also use other tools to check indexing. I will tell you about the simplest and most effective ones.

SE Ranking

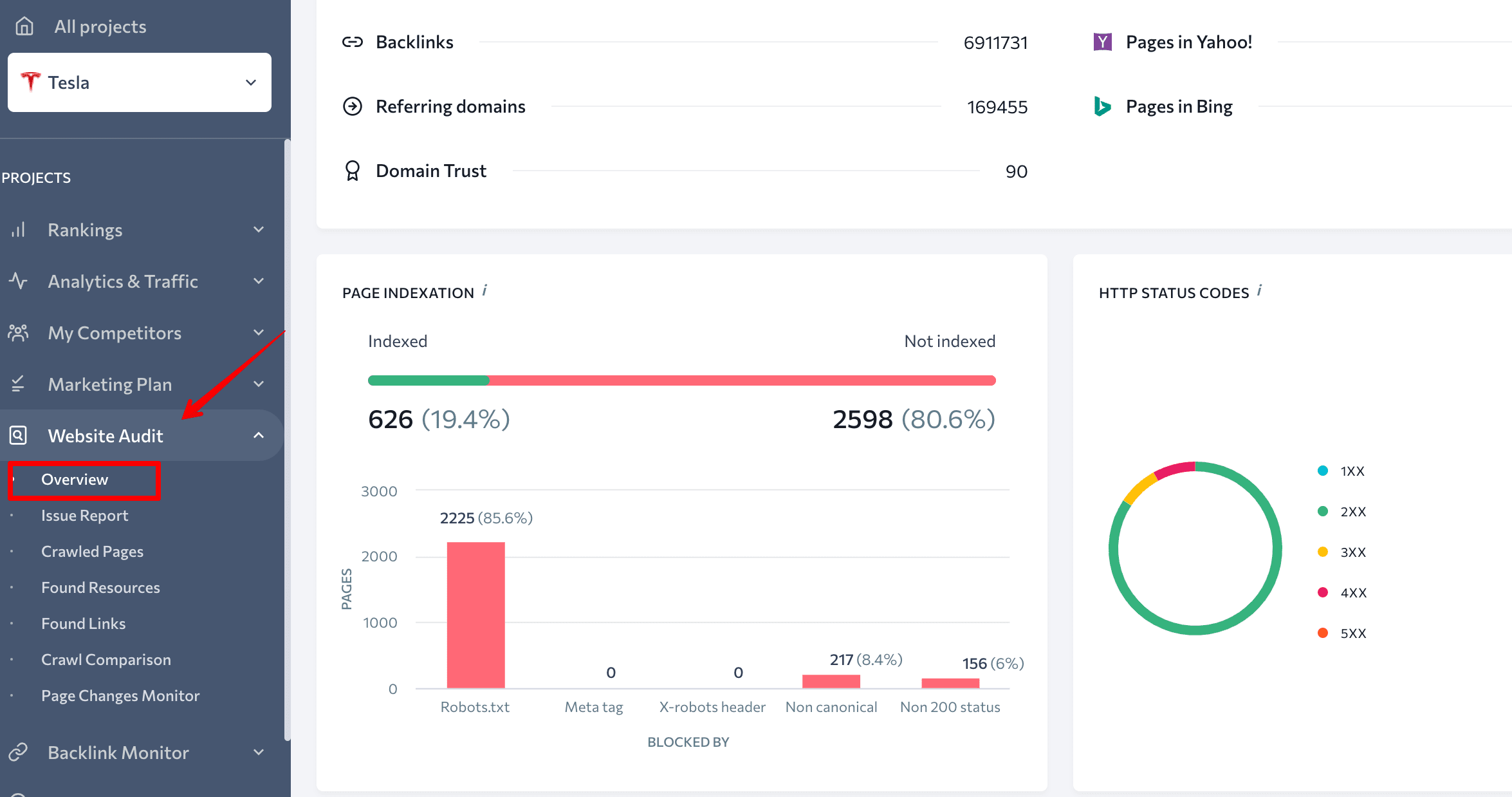

In SE Ranking’s Website Audit tool, you will find information about website indexing.

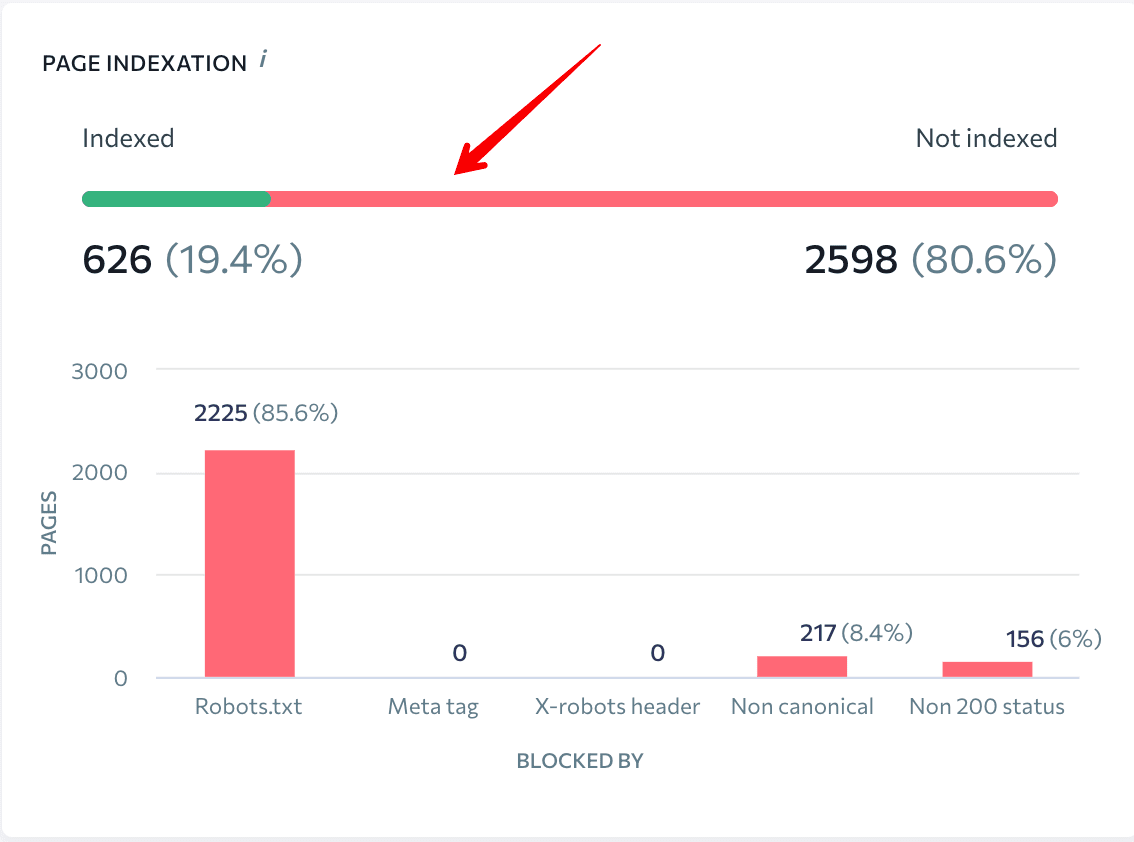

Go to the Overview and scroll to the Page Indexation block.

Here, you’ll see a graph of indexed and not indexed pages, their percentage ratio, and number. This dashboard also shows issues that won’t let search engines index pages of the website. You can view a detailed report by clicking on the graph.

By clicking on the green line, you’ll see the list of indexed pages and their parameters: status code, blocked by robots.txt, referring pages, x-robots-tag, title, description, etc.

And then click on the red line: you’ll see the same table, but with pages that were not indexed.

This extensive information will help you find and fix the issues so that you can be sure all important website pages are indexed.

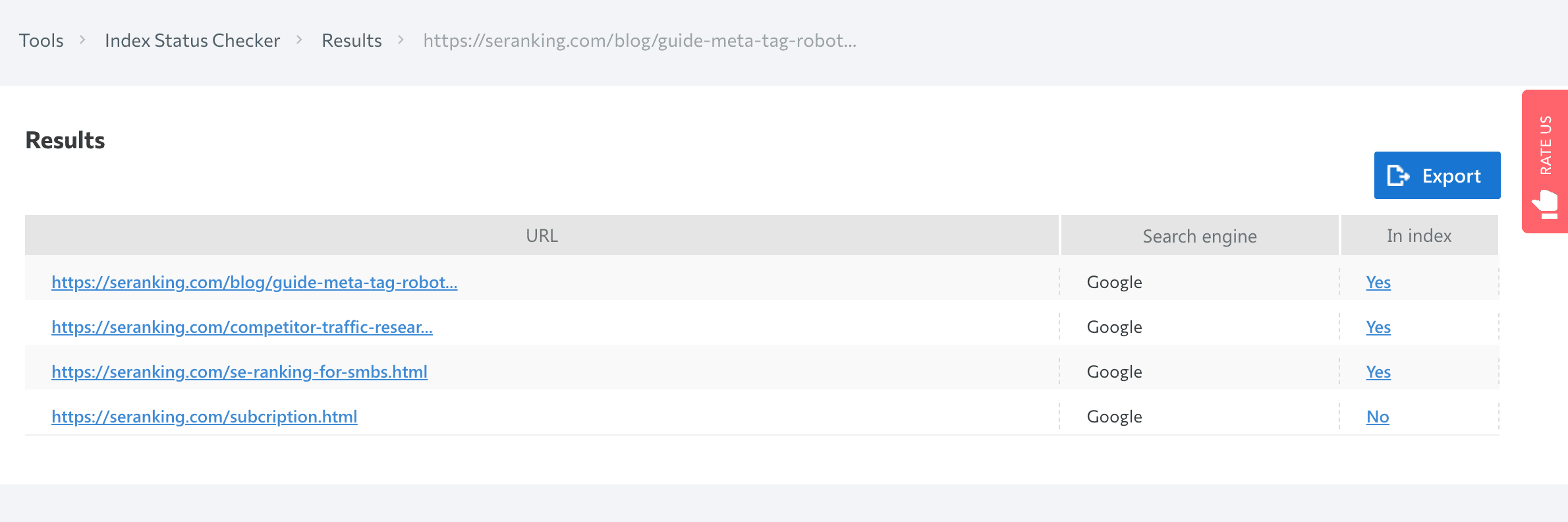

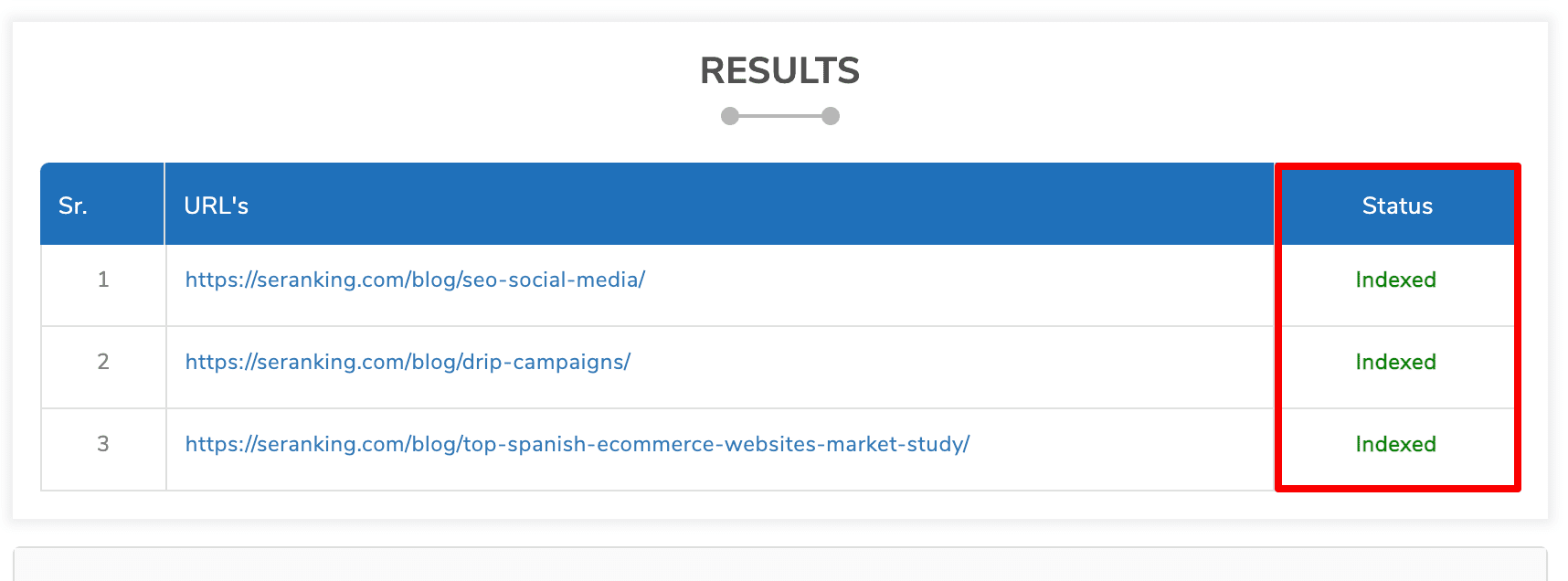

You can also check page indexing with SE Ranking’s Index Status Checker. Just choose the search engine and enter a URL list.

Prepostseo

Prepostseo is another tool that helps you check website indexing.

Just paste the website URL or list of URLs that you want to check and click on the Submit button. You’ll get a results table with two values against each URL:

- By clicking the Full website indexed page link, you will be redirected to a Google SERP where you can find a full list of indexed pages of that specific domain.

- By clicking the Check current page only link, you will be redirected to a results page where you can check if that exact URL is listed in Google or not.

With this website index checker, you can check 1,000 pages at once.

Small SEO Tools

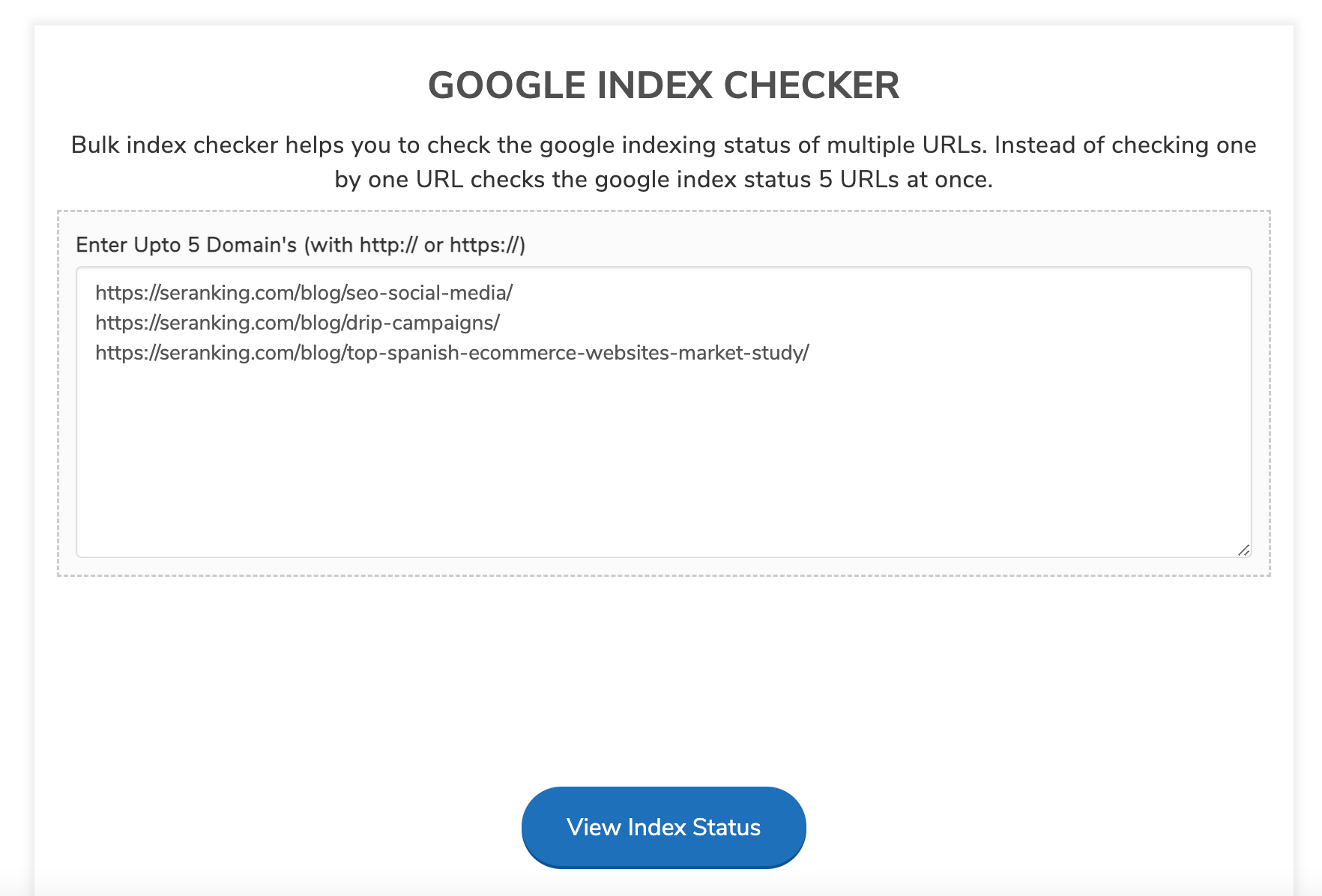

This index checker by Small SEO Tools also helps you to check the Google indexing status of multiple URLs. Simply enter the URLs and click on the Check button, and the tool will process your request.

You’ll see the table with the info about indexing of each URL.

Here, you can check the Google index status of 5 URLs at once.

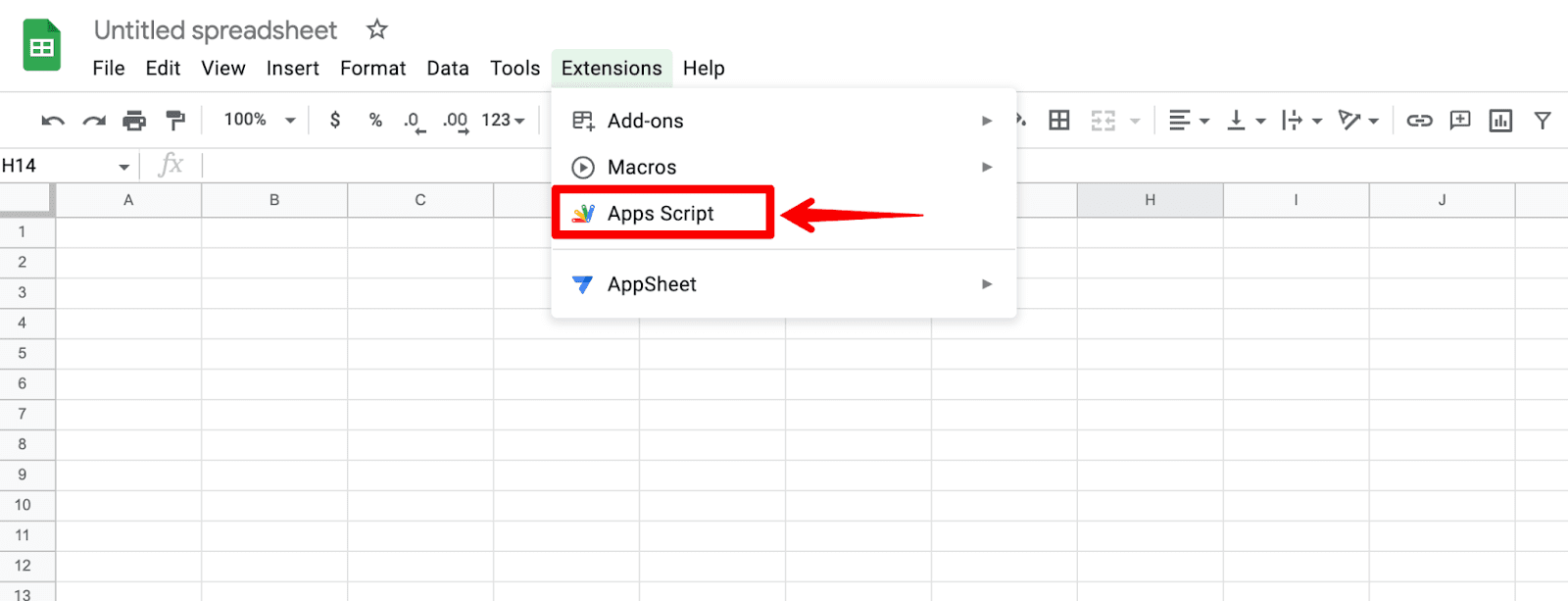

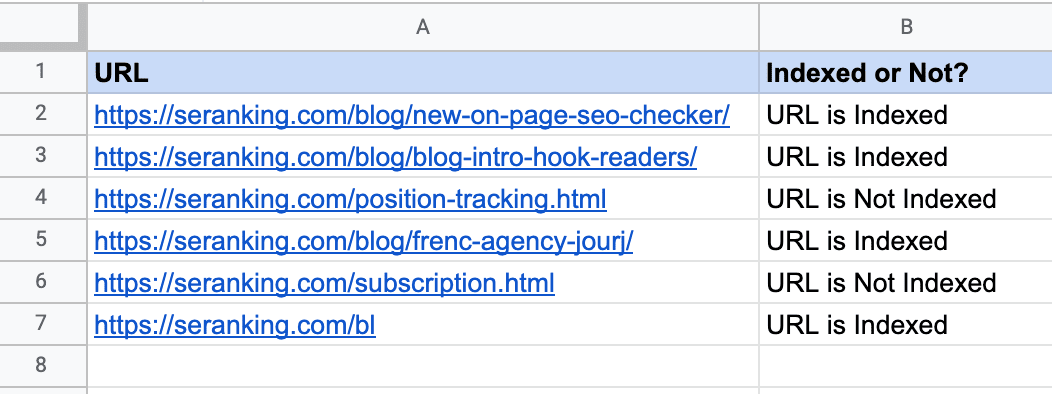

Check indexing in Google Sheets

You can scrape a lot of things with Google Sheets—for example, you can use the script described below.

It can search for site:domain.com in Google to check if there are indexed results for the input URL. Let’s see step by step how it works.

Step 1. Open Google Sheets ▶️ Go to Extensions ▶️ Click on Apps Script

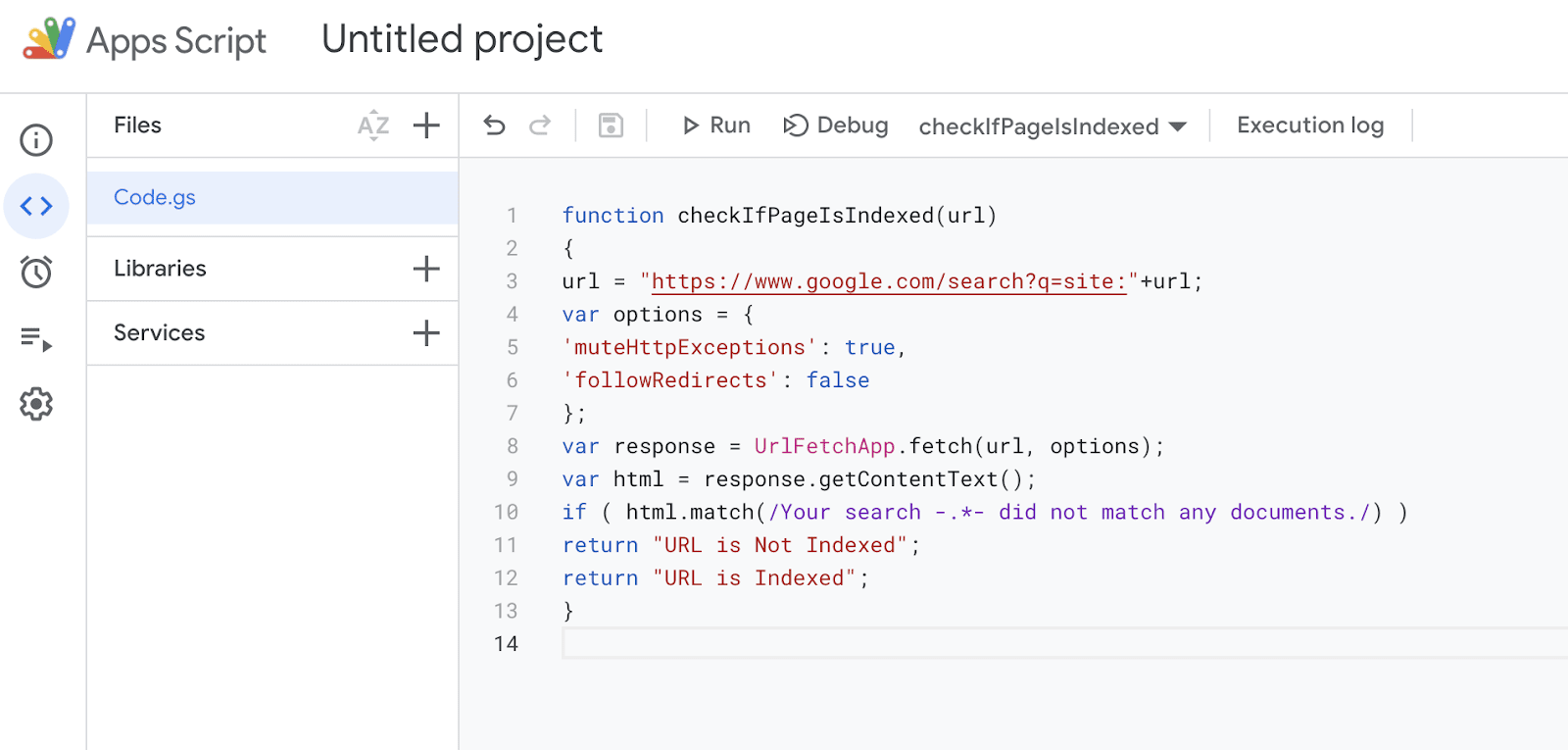

Step 2. Copy and paste this code into the Script editor:

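    /**
     * Custom function for Google Sheets.
     * Runs a site: query for the given URL and reports whether Google returned results.
     */
    function checkIfPageIsIndexed(url) {
      // Encode the query so that characters like ?, & and # in the URL survive
      var searchUrl = "https://www.google.com/search?q=" + encodeURIComponent("site:" + url);
      var options = {
        'muteHttpExceptions': true, // return error responses instead of throwing
        'followRedirects': false
      };
      var response = UrlFetchApp.fetch(searchUrl, options);
      // A non-200 answer (for example, when automated requests are throttled) says nothing about indexing
      if (response.getResponseCode() !== 200) {
        return "Check failed (HTTP " + response.getResponseCode() + ")";
      }
      var html = response.getContentText();
      // Google prints this message when the query has no results
      if (html.match(/Your search -.*- did not match any documents\./)) {
        return "URL is Not Indexed";
      }
      return "URL is Indexed";
    }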

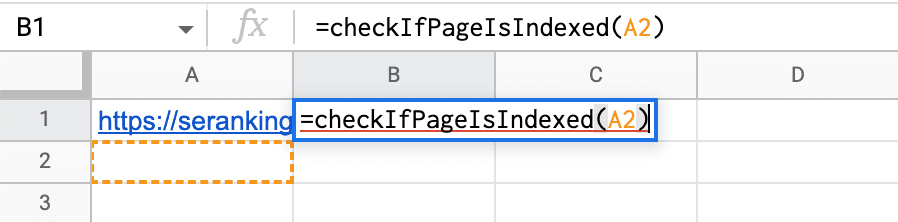

Step 3. Paste necessary links into the table and run the function in Google Sheets.

To do so, copy the name of the function:

checkIfPageIsIndexed(URL)

Then insert =checkIfPageIsIndexed(url) next to the first link, where url is the cell that holds the URL. In the screenshot, it’s A2, so the formula is =checkIfPageIsIndexed(A2). Repeat this step for all the cells of the column.

As a result, you will see which pages are indexed and which are not.

The advantage of this method is that you can check a huge list of pages quickly enough.
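
One possible refinement, sketched here under a few assumptions: keep the "no results" check in a separate pure function, so it can be tested without calling Google, and let a wrapper accept a whole column at once (a custom function in Sheets receives a range as an array of rows). The names looksUnindexed, checkRange, and fakeFetch are hypothetical, and the fetcher is passed in as a parameter; in Apps Script it would wrap UrlFetchApp.fetch.

```javascript
// Pure check: does the results page contain Google's "no results" message?
function looksUnindexed(html) {
  return /Your search -.*- did not match any documents\./.test(html);
}

// Apply the check to a Sheets-style range (an array of rows).
// `fetchHtml` is an injected function: search URL -> HTML string.
function checkRange(rows, fetchHtml) {
  return rows.map((row) => {
    const url = String(row[0] || "").trim();
    if (!url) return [""]; // keep empty cells empty
    const html = fetchHtml(
      "https://www.google.com/search?q=" + encodeURIComponent("site:" + url)
    );
    return [looksUnindexed(html) ? "URL is Not Indexed" : "URL is Indexed"];
  });
}

// Stub fetcher for demonstration; it never touches the network.
function fakeFetch(searchUrl) {
  return searchUrl.includes("missing")
    ? "Your search - <b>site:example.com/missing</b> - did not match any documents."
    : "<div>About 12 results</div>";
}

const rangeResult = checkRange(
  [["example.com/page"], ["example.com/missing"], [""]],
  fakeFetch
);
```

Separating the parsing from the request also makes it easier to adjust the pattern if the wording of the results page changes.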

What are the specifics of indexing websites built with different technologies?

We’ve puzzled out how Google indexes websites, how to submit pages for indexing, and how to check whether they appear in SERP. Now, let’s talk about an equally important thing: how web development technology affects the indexing of website content.

The more you know about indexing aspects of websites with different technologies, the higher your chances of having all your pages successfully indexed. So, let’s get down to different technologies and their indexing.

Flash content

Flash started as a simple piece of animation software, but in the years that followed, it has shaped the web as we know it today. Flash was used to make games and indeed entire websites, but today, Flash is quite dead.

Over the 20 years of its development, the technology has had a lot of shortcomings, including a high CPU load, Flash Player errors, and indexing issues. Flash is cumbersome, consumes a huge amount of system resources, and has a devastating impact on mobile device battery life.

In 2019, Google stopped indexing Flash content, making a statement about the end of an era.

Not surprisingly, search engines recommend not using Flash on websites. But if your site is designed using this technology, create a text version of the site. It will be useful for users who have never installed Flash or have an outdated version, as well as for mobile device users (such devices do not display Flash content).

JavaScript

Nowadays, it’s increasingly common to see JavaScript websites with dynamic content—they load quickly and are user-friendly. Before JS started dominating web development, search engines were crawling only the text-based content—HTML. Over time, JS was becoming more and more popular, and Google started crawling and indexing such content better.

In 2018, John Mueller said that it took a few days or even weeks for the page to get rendered. Therefore, JavaScript websites could not expect to have their pages indexed fast. Over the past years, Google has improved its ability to index JavaScript. In 2019, Google claimed they needed a median time of 5 seconds for JS-based pages to go from crawler to renderer.

A study conducted in late 2019 found that Google is indeed getting faster at indexing JavaScript-rendered content. 60% of JavaScript content is indexed within 24 hours of indexing the HTML. However, that still leaves 40% of content that can take longer.

To see what’s hidden within the JavaScript that normally looks like a single link to the JS file, Googlebot needs to render it. Only after this step can Google see all the content in HTML tags and scan it fast.

JavaScript rendering is a very resource-demanding thing. There’s a delay in how Google processes JavaScript on pages, and before rendering is complete, Google will struggle to see all JS content that is loaded on the client side. With plenty of information missing, the search engine will crawl such a page but won’t be able to understand if the content is high-quality and responds to the user intent.

Keep in mind that page sections injected with JavaScript may contain internal links. And if Google fails to render JavaScript, it can’t follow the links. As a result, a search engine can’t index such pages unless they are linked to other pages or the sitemap.
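
To illustrate the point about links, here is a rough sketch of what a crawler can find before any rendering happens. It extracts links from the initial HTML only. The function name extractLinks and both sample pages are hypothetical, and a regular expression stands in for a real HTML parser.

```javascript
// Collect href values from <a> tags found in an HTML string.
// A regex is enough for this illustration; a real crawler would use an HTML parser.
function extractLinks(html) {
  const links = [];
  const anchorPattern = /<a\s[^>]*?href\s*=\s*["']([^"']+)["']/gi;
  let match;
  while ((match = anchorPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

// Server-rendered page: links are present in the initial HTML.
const serverRendered =
  '<body><nav><a href="/pricing">Pricing</a> <a href="/blog">Blog</a></nav></body>';

// Client-rendered page: the initial HTML only references a script
// that would inject the same links later in the browser.
const clientRendered =
  '<body><div id="app"></div><script src="/bundle.js"></script></body>';

const linksBeforeRendering = {
  server: extractLinks(serverRendered), // two links found
  client: extractLinks(clientRendered), // nothing to follow yet
};
```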

To improve JS website indexing, read our guide.

There are a lot of JS-based technologies. Below, we’ll dive into the most popular ones.

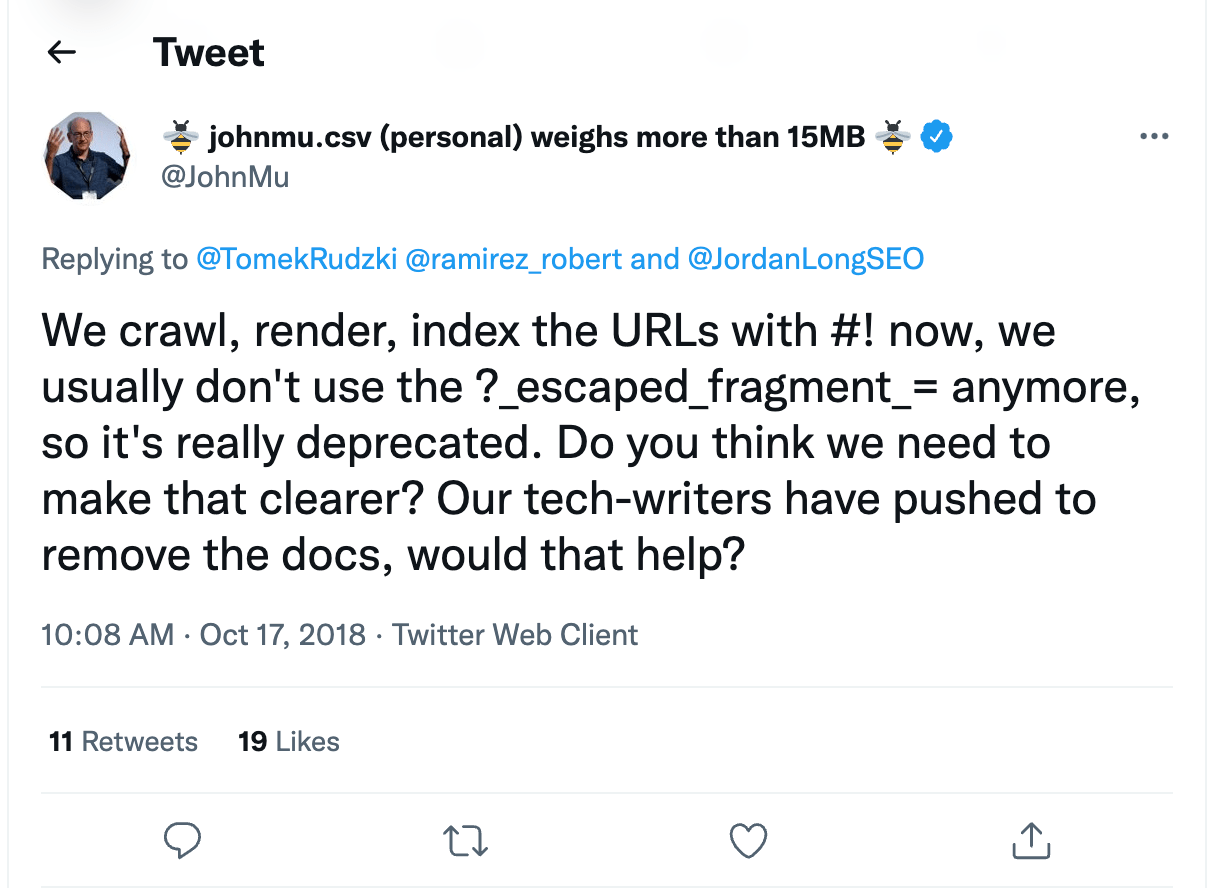

AJAX

AJAX allows pages to update asynchronously by exchanging a small amount of data with the server. One of the signature features of websites using AJAX is that content is loaded by one continuous script, without division into pages with separate URLs. As a result, the website has pages with a hash (#) in the URL.

Historically, such pages were not indexed by search engines. Instead of scanning the https://mywebsite.com/#example URL, the crawler went to https://mywebsite.com/ and didn’t scan the URL with #. As a result, crawlers simply couldn’t scan all the website content.
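
The reason is easy to see when you split such an address into its parts: the fragment after # is handled by the browser and is not part of the request that goes to the server. A small sketch using the standard URL class (the helper name requestTargetFor is hypothetical):

```javascript
// The fragment (everything after #) stays in the browser;
// it is not part of the HTTP request sent to the server.
function requestTargetFor(address) {
  const parsed = new URL(address);
  return {
    sentToServer: parsed.origin + parsed.pathname + parsed.search,
    fragment: parsed.hash,
  };
}

const hashUrl = requestTargetFor("https://mywebsite.com/#example");
// hashUrl.sentToServer is "https://mywebsite.com/"
// hashUrl.fragment is "#example"
```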

From 2019, websites with AJAX are rendered, crawled, and indexed directly by Google, which means that bots scan and process the #! URLs, mimicking user behavior.

Therefore, webmasters no longer need to create the HTML version of every page. But it’s important to check if your robots.txt allows scanning AJAX scripts. If they are disallowed, be sure to open them for search indexing. To do this, add the following rules to the robots.txt:

    User-agent: Googlebot
    Allow: /*.js
    Allow: /*.css
    Allow: /*.jpg
    Allow: /*.gif
    Allow: /*.png
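
To get a feel for how such Allow lines interact with Disallow rules, here is a simplified sketch of rule matching. It assumes the behavior described in Google's robots.txt documentation as I understand it: the longest matching pattern wins, and Allow wins a tie. It is not a full parser: it ignores User-agent groups, and the names patternToRegExp and isAllowed, as well as the /js/ directory, are hypothetical.

```javascript
// Turn a robots.txt path pattern into a RegExp.
// Supports the two wildcards: * (any characters) and a trailing $ (end of URL).
function patternToRegExp(pattern) {
  const anchoredEnd = pattern.endsWith("$");
  const body = anchoredEnd ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp("^" + escaped + (anchoredEnd ? "$" : ""));
}

// Decide whether `path` may be crawled under a list of rules.
// The most specific (longest) matching pattern wins; Allow wins a tie.
function isAllowed(rules, path) {
  let best = null;
  for (const rule of rules) {
    if (!patternToRegExp(rule.pattern).test(path)) continue;
    const longer = best === null || rule.pattern.length > best.pattern.length;
    const tieForAllow =
      best !== null &&
      rule.pattern.length === best.pattern.length &&
      rule.type === "allow";
    if (longer || tieForAllow) best = rule;
  }
  // No matching rule means the path is allowed by default.
  return best === null || best.type === "allow";
}

// Hypothetical rule set: a blocked directory plus two of the Allow lines above.
const googlebotRules = [
  { type: "disallow", pattern: "/js/" },
  { type: "allow", pattern: "/*.js" },
  { type: "allow", pattern: "/*.css" },
];

const scriptAllowed = isAllowed(googlebotRules, "/js/app.js");
const textFileAllowed = isAllowed(googlebotRules, "/js/readme.txt");
```

Because specificity is measured by pattern length, a short Allow such as /*.js would not override a longer Disallow like /assets/scripts/, so it is worth testing the final file rather than assuming the Allow lines always win.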

SPA

Single-page application—or SPA—is a relatively new trend of incorporating JavaScript into websites. Unlike traditional websites that load HTML, CSS, JS by requesting each from the server when it’s needed, SPAs require just one initial loading and don’t bother the server after that, leaving all the processing to the browser. It may sound great—as a result, such websites load faster. But this technology might have a negative impact on SEO.

While scanning, crawlers don’t get enough page content; they don’t understand that the content is being loaded dynamically. As a result, search engines see an empty page yet to be filled.

Moreover, with SPA, you also lose the traditional logic behind the 404 error page and other non-200 server status codes. As content is rendered by the browser, the server returns a 200 HTTP status code to every request, and search engines can’t tell if some pages are not valid for indexing.
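
The difference can be sketched in a few lines. The first function mimics the typical SPA fallback, where every path gets the same application shell; the second checks the path on the server before answering. All names and routes here are hypothetical, and a real setup would live in your server or hosting configuration.

```javascript
// Routes the (hypothetical) application actually knows about.
const knownRoutes = new Set(["/", "/pricing", "/blog"]);

// Typical SPA fallback: every path gets the same shell and a 200.
function spaFallbackStatus(path) {
  return 200;
}

// Alternative: check the path on the server first and return
// a real 404 for anything that is not a known route.
function routeAwareStatus(path) {
  return knownRoutes.has(path) ? 200 : 404;
}

const missingPage = "/no-such-page";
const statuses = {
  fallback: spaFallbackStatus(missingPage), // 200, looks like a valid page
  routeAware: routeAwareStatus(missingPage), // 404, clearly not for indexing
};
```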

If you want to learn how to optimize single-page applications to improve their indexing, take a look at our comprehensive blog post about SPA.

Frameworks

JavaScript frameworks are used to develop dynamic website interaction. Websites built with React, Angular, Vue, and other JavaScript frameworks are all set to client-side rendering by default. Due to this, frameworks are potentially riddled with SEO challenges:

- Google crawlers can’t actually see what’s on the page. As I said before, search engines find it difficult to index any content that requires a click to load.

- Speed is one of the biggest hurdles. Google crawls pages un-cached, so those cumbersome first loads can be problematic.

- Client-side code adds increased complexity to the finalized DOM, which means more CPU resources are required by both search crawlers and client devices. This is one of the most significant reasons why a complex JS framework would not be preferred.

How to restrict site indexing

There are some pages you don’t want Google or other search engines to index. Not all pages should rank and be included in search results.

What content is most often restricted?

- Internal and service files: those that should be seen only by the site administrator or webmaster, for example, a folder with user data specified during registration: /wp-login.php; /wp-register.php.

- Pages that are not suitable for display in search results or for the first acquaintance of the user with the resource: thank you pages, registration forms, etc.

- Pages with personal information: contact information that visitors leave during orders and registration, as well as payment card numbers.

- Files of a certain type, such as pdf documents.

- Duplicate content: for example, a page you’re doing an A/B test for.

So, you can block information that has no value to the user and does not affect the site’s ranking, as well as confidential data, from being indexed.

Restricting indexing solves two problems:

- Reduce the likelihood of certain pages being crawled, indexed, and shown in search results.

- Save crawling budget—a limited number of URLs per site that a robot can crawl.

Let’s see how you can restrict website content.

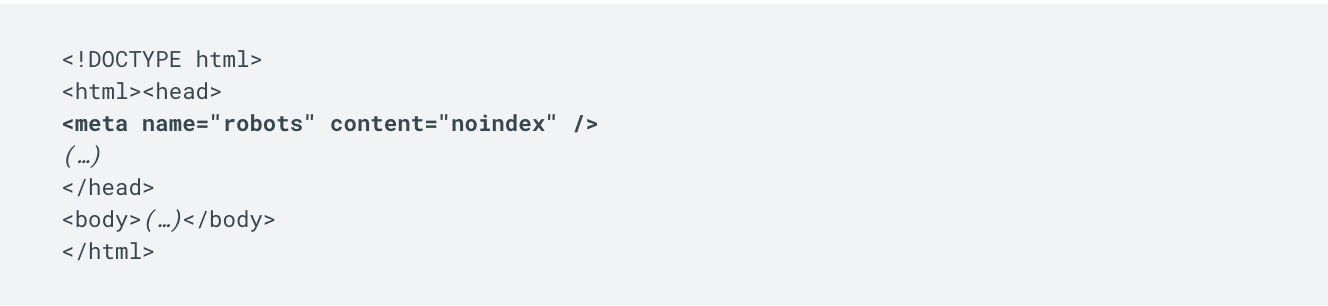

Robots meta tag

Meta robots is a tag where commands for search bots are added. They affect the indexing of the page and the display of its elements in search results. The tag is placed in the <head> of the web document to instruct the robot before it starts crawling the page.

The robots meta tag is a more reliable way to manage indexing than robots.txt, which works only as a recommendation for the crawler. With the robots meta tag, you can specify commands (directives) for the robot directly in the page code. It should be added to every page that should not be indexed.

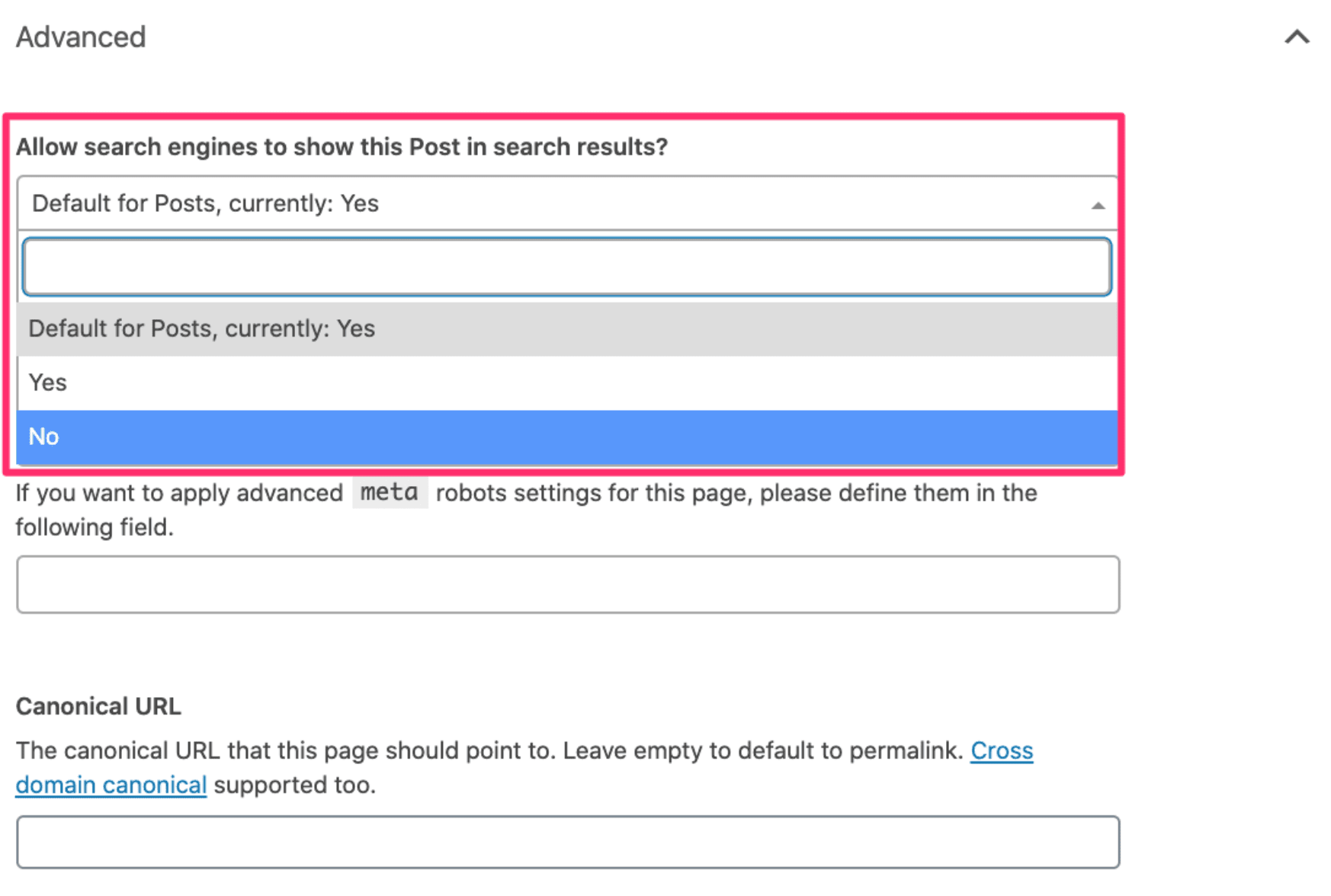

How to add meta tag robots to your website?

To prevent the page from being indexed using the robots meta tag, you need to make changes to the page code. This can be done manually or through the admin panel.

To implement a meta tag manually, you need to enter the hosting control panel and find the desired page in the root directory of the site.

Just add the robots meta tag to the <head> section, and save it.

You can also add a meta tag through the site admin panel. If a special plugin is connected to the site’s content management system (CMS), then just go to the page settings and tick the necessary checkboxes to add the noindex and nofollow commands.

Now, let’s take a closer look at meta robots feature and how to apply it manually.

Meta tags have two parameters: name and content.

- In name, you define which search robot you are addressing

- In content, you add a specific command

<meta name="robots" content="noindex" />

If robots is specified as the name attribute, this means the command is relevant for all search robots.

If you need to give a command to a specific bot, instead of robots, indicate its name, for example:

<meta name="Googlebot-News" content="noindex" /> or <meta name="googlebot" content="noindex" />

Now, let’s look at the content attribute. It contains the command that the robot must execute. Noindex is used to prohibit indexing of the page and its content. As a result, the robot won’t index the page.
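
To verify that a page really carries the directive, you can look for it in the HTML. Below is a minimal sketch that checks whether a noindex command applies to a given bot. The function names readAttribute and isNoindexFor and the sample pages are hypothetical, and the regex-based parsing is a simplification that assumes quoted attribute values.

```javascript
// Read an attribute value from a single tag string.
function readAttribute(tag, attribute) {
  const pattern = new RegExp(attribute + '\\s*=\\s*["\']([^"\']*)["\']', "i");
  const match = tag.match(pattern);
  return match ? match[1] : null;
}

// Return true when the HTML carries a noindex directive that applies to `botName`.
// Tags named "robots" apply to every bot; others only to the named bot.
function isNoindexFor(html, botName) {
  const metaTags = html.match(/<meta\b[^>]*>/gi) || [];
  return metaTags.some((tag) => {
    const name = (readAttribute(tag, "name") || "").toLowerCase();
    const content = (readAttribute(tag, "content") || "").toLowerCase();
    const addressed = name === "robots" || name === botName.toLowerCase();
    const directives = content.split(",").map((item) => item.trim());
    return addressed && directives.includes("noindex");
  });
}

const pageForAll = '<head><meta name="robots" content="noindex" /></head>';
const pageForNews =
  '<head><meta name="Googlebot-News" content="noindex, nofollow" /></head>';

const blockedForEveryone = isNoindexFor(pageForAll, "googlebot");
const blockedForNewsOnly =
  isNoindexFor(pageForNews, "googlebot-news") && !isNoindexFor(pageForNews, "googlebot");
```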

Server-side

You can also restrict indexing of website content server-side. To do so, find the .htaccess file in the website root directory and add the following code to it:

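    SetEnvIfNoCase User-Agent "^Googlebot" search_bot

This code is for Googlebot, but you can set a restriction for any search engine:

    SetEnvIfNoCase User-Agent "^Yahoo" search_bot
    SetEnvIfNoCase User-Agent "^Robot" search_bot
    SetEnvIfNoCase User-Agent "^php" search_bot
    SetEnvIfNoCase User-Agent "^Snapbot" search_bot
    SetEnvIfNoCase User-Agent "^WordPress" search_bot

Note that SetEnvIfNoCase only marks matching requests with the search_bot variable. To actually refuse them, the variable has to be referenced in an access rule, for example, on Apache 2.4:

    <RequireAll>
        Require all granted
        Require not env search_bot
    </RequireAll>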

This rule allows you to block unwanted User Agents, which can be potentially dangerous or simply overload the server with unnecessary requests.
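
Before relying on such patterns, it helps to test them against real User-Agent strings. The sketch below mirrors the patterns from the SetEnvIfNoCase lines as JavaScript regular expressions; the function name isTaggedAsSearchBot is hypothetical, and the two sample strings are User-Agent values that Google lists for Googlebot.

```javascript
// Patterns taken from the SetEnvIfNoCase lines above.
// The "i" flag mirrors the case-insensitive matching of SetEnvIfNoCase.
const blockedAgentPatterns = [
  /^Googlebot/i,
  /^Yahoo/i,
  /^Robot/i,
  /^php/i,
  /^Snapbot/i,
  /^WordPress/i,
];

// True when the User-Agent header would be tagged as search_bot.
function isTaggedAsSearchBot(userAgent) {
  return blockedAgentPatterns.some((pattern) => pattern.test(userAgent));
}

// Sample header values for illustration.
const legacyGooglebot = "Googlebot/2.1 (+http://www.google.com/bot.html)";
const commonGooglebot =
  "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";

const legacyTagged = isTaggedAsSearchBot(legacyGooglebot);
const commonTagged = isTaggedAsSearchBot(commonGooglebot);
```

Here, the first string is tagged and the second is not: the ^ anchor requires the header to start with the bot name, while the second string starts with Mozilla/5.0. If you want to catch both, a pattern without the anchor (just "Googlebot") is one option to consider.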

Set a website access password

The second way to prohibit site indexing with the help of .htaccess file is to create the password for accessing the site. Add this code to the .htaccess file:

    AuthType Basic
    AuthName "Password Protected Area"
    AuthUserFile /home/user/www-auth/.htpasswd
    Require valid-user

Here, /home/user/www-auth/.htpasswd is the file with the password that you have to set.

The password is set by the website owner who needs to identify himself by adding a username. Therefore, you need to add the user to the password file:

htpasswd -c /home/user/www-auth/.htpasswd USERNAME

As a result, the bot will not be able to crawl the website and index it.

Common indexing errors

Sometimes, Google cannot index a page, not only because you have restricted content indexing but also because of technical issues on the website.

Here are the 5 most common issues that prevent Google from indexing your pages.

Duplicate content

Having the same content on different pages of your website can negatively affect optimization efforts because your content isn’t unique. Since Google doesn’t know which URL to list higher in SERP, it might rank both URLs lower and give preference to other pages. Plus, suppose Google decides that your content is deliberately duplicated across domains in an attempt to manipulate search engine rankings. In that case, the website may not only lose position but also can be dropped from Google’s index. So, you’ll have to get rid of duplicate content on your site.

Let’s look at some steps you can take to avoid duplicate content issues.

- Set up redirects. Use 301 redirects in your .htaccess file to guide users and crawlers to proper pages whenever a website/URL changes.

- Work on the website structure. Make sure that the content does not overlap (common with blogs and forums). For example, a blog post may appear on the main page of a website, and an archive page.

- Minimize similar content. Your site likely has similar content on different pages that you need to get rid of. For example, the website has two separate pages with almost identical text. You need to either merge 2 pages into one page or create unique content for both pages.

- Use the canonical tag. If you want to keep duplicate content on your website, Google recommends using the rel="canonical" link element. What canonical does is point the search engines to the main version of the page.
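
To check which version a page declares as the main one, you can read the canonical link from its HTML. This is a minimal sketch: the function name findCanonical and the sample markup are hypothetical, and the regex-based parsing assumes quoted attribute values.

```javascript
// Find the canonical URL declared in a page's HTML, or null if there is none.
function findCanonical(html) {
  const linkTags = html.match(/<link\b[^>]*>/gi) || [];
  for (const tag of linkTags) {
    const rel = tag.match(/\brel\s*=\s*["']([^"']*)["']/i);
    const href = tag.match(/\bhref\s*=\s*["']([^"']*)["']/i);
    if (rel && href && rel[1].toLowerCase() === "canonical") {
      return href[1];
    }
  }
  return null;
}

// Hypothetical duplicate page that points to the main version.
const duplicatePage =
  '<head><link rel="stylesheet" href="/main.css">' +
  '<link rel="canonical" href="https://example.com/shoes/"></head>';

const declaredCanonical = findCanonical(duplicatePage);
```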

HTTP status code issues

Another problem that might prevent any website page from being crawled and indexed is an HTTP status issue. Website pages, files, or links are supposed to return the 200 status code. If they return other HTTP status codes, your website can experience indexing and ranking issues. Let’s look at the main types of response codes that can hurt your website indexing:

- 3XX response code indicates that there’s a redirect to another page. Each redirect puts an additional load on the server, so it’s better to remove unnecessary internal redirects. Redirects themselves do not negatively affect the indexing, but you should monitor their proper setting. Ideally, the number of 3XX pages on the website should not exceed 5% of the total number of pages. If their number is higher than 10%, that’s bad news for you. Review all redirects and remove some of them.

- 4XX response code indicates that the requested page cannot be accessed. When clicking on a non-existing URL, users will see that the page is missing. This harms the website indexing, which can lead to ranking drops. Plus, internal links to 4XX pages drain your crawl budget. To get rid of unnecessary 4XX URLs, review the list of such broken links and replace them with accessible pages, or just remove some of the links. Besides, to avoid 4XX errors, you can set up 301 redirects. Keep in mind: 404 pages can exist on your website, but if a user ends up seeing them, you have to make sure that they are well-thought-out. Thus, you can minimize reputational and usability damages.

- 5XX response code means the problem was caused by the server. Pages that return such codes are inaccessible both to website visitors and to search engines. As a result, the crawler cannot crawl and index such a broken page. To fix this issue, try reproducing the server error for these URLs through the browser and check the server’s error logs. What’s more, you can consult your hosting provider or web developer since your server may be misconfigured.
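
If you have a list of status codes from a crawl, the checks described above can be automated. The sketch below groups codes by class and rates the share of redirects against the 5% and 10% marks mentioned in the list. The function names, the rating labels, and the sample data are hypothetical.

```javascript
// Group status codes by class (2XX, 3XX, 4XX, 5XX) and count them.
function summarizeStatusCodes(codes) {
  const summary = { "2XX": 0, "3XX": 0, "4XX": 0, "5XX": 0, other: 0 };
  for (const code of codes) {
    const key = Math.floor(code / 100) + "XX";
    if (key in summary) summary[key] += 1;
    else summary.other += 1;
  }
  return summary;
}

// Rate the share of redirecting pages against the 5% and 10% marks from the text.
function rateRedirectShare(codes) {
  if (codes.length === 0) return "no data";
  const share = summarizeStatusCodes(codes)["3XX"] / codes.length;
  if (share <= 0.05) return "ok";
  if (share <= 0.1) return "review";
  return "too many";
}

// Hypothetical crawl result: 20 pages, 3 of them redirects (15%).
const crawledCodes = [
  200, 200, 200, 200, 200, 200, 200, 200, 200, 200,
  200, 200, 200, 200, 301, 301, 302, 404, 404, 500,
];

const codeSummary = summarizeStatusCodes(crawledCodes);
const redirectRating = rateRedirectShare(crawledCodes);
```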

Internal linking issues

Internal links help crawlers scan websites, discover new pages, and index them faster. What problem can you face here? If some website pages don’t have any internal links pointing to them, search engines are unlikely to find and index these pages. You can still point to them in the XML sitemap or via external links, but internal linking should not be ignored.

Make sure that your website’s most important pages have at least a couple of internal links pointing to them.

Keep in mind: all internal links should pass link juice, that is, they should not be tagged with the rel="nofollow" attribute. After all, using internal links in a smart way can even boost your rankings.
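
Pages without any incoming internal links are easy to find once you have a map of which page links to which. Here is a minimal sketch of that check; the function name findOrphanPages and the four-page site are hypothetical, and in practice the link map would come from a crawl of your website.

```javascript
// Return pages that no other page links to.
// `links` maps each page to the internal URLs it links out to.
function findOrphanPages(pages, links) {
  const linkedTo = new Set();
  for (const [source, targets] of Object.entries(links)) {
    for (const target of targets) {
      if (target !== source) linkedTo.add(target); // ignore self-links
    }
  }
  return pages.filter((page) => !linkedTo.has(page));
}

// Hypothetical four-page site.
const sitePages = ["/", "/pricing", "/blog", "/old-landing"];
const siteLinks = {
  "/": ["/pricing", "/blog"],
  "/blog": ["/", "/pricing"],
  "/pricing": ["/"],
};

const orphans = findOrphanPages(sitePages, siteLinks);
// orphans contains only "/old-landing"
```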

Blocked JavaScript, CSS, and Image Files

For optimal rendering and indexing, crawlers should be able to access your JavaScript, CSS, and image files. If you disallow the crawling of these files, it directly harms the indexing of your content. Let’s look at 3 steps that will help you to avoid this issue.

- To make sure the crawler accesses your CSS, JavaScript, and image files, use the URL Inspection tool in GSC. It provides information about Google’s indexed version of a specific page and shows how Googlebot sees your website content. So it can help you fix indexing issues.

- Check and test your robots.txt—check if all the directives are properly set up. You can do it with Google’s robots.txt tester.

- To see if Google detects your website’s mobile pages as compatible for visitors, use the Mobile-Friendly Test.

Slow-loading pages

It’s important to make sure your website loads quickly. Google doesn’t like slow-loading sites, and they take longer to get indexed. The reasons can vary: for example, outdated servers with limited resources, or pages too heavy for the user’s browser to process.

The best practice is to get your website to load in less than 2 to 3 seconds. Keep in mind that Core Web Vitals metrics, which measure and evaluate the speed, responsiveness, and visual stability of websites, are Google ranking factors.

To learn more about how to improve your site’s speed, read our blog post.

You can monitor all these issues with special SEO tools, for example, SE Ranking’s Website Audit. To find errors on the website, go to the Issue Report, which provides a complete list of errors and recommendations for fixing them.

The report includes insights on issues related to:

- Website Security

- JavaScript, CSS

- Crawling

- Duplicate Content

- HTTP Status Code

- Title & Description

- Usability

- Website Speed

- Redirects

- Internal & External Links etc.

By fixing all the issues, you can improve the website indexing and increase its ranking in search results.

Conclusion

Getting your site crawled and indexed by Google is essential, but it does take a while for your web pages to appear in the SERP. With a thorough understanding of all the subtleties of search engine indexing, you will not hurt your website by making false steps.

If you set up and optimize your sitemap correctly, take into account technical search engine requirements, and make sure you have high-quality and useful content, Google won’t leave your website unattended.

In this blog post, we have discussed all the important indexing aspects:

- Notifying the search engine of a new website or page by means of creating a sitemap, special URL adding tools, and external links.

- Using a search engine operator, special tools, and scripts to check website indexing.

- The specifics of indexing websites that use AJAX, JavaScript, SPA, and frameworks.

- Restricting site indexing with the help of the robots meta tag, server-side rules, and an access password.

- Common indexing errors: internal linking issues, duplicate content, slow loading pages, etc.

Keep in mind that a high indexing rate isn’t equal to high Google rankings. But it’s the basis for your further website optimization. So, before doing anything else, check your pages’ indexing status to verify that they can be indexed.